GAMES101 Lecture 19 - Cameras, Lenses and Light Fields

Imaging = Synthesis + Capture

I. Cameras, Lenses

Cross-section of Nikon D3, 14-24mm F2.8 lens

Pinholes & Lenses form images

Shutter exposes the sensor for a precise duration

Sensor accumulates irradiance during exposure

Without a lens or pinhole, each sensor point records irradiance (light arriving from all directions), so all pixel values would be similar

If the sensor could record radiance instead, we could form an image without lenses or pinholes by capturing light from a programmed direction

Pinhole Image Formation

No defocus blur: every depth of the 3D scene is captured sharply (an ideal pinhole camera effectively has infinite depth of field)

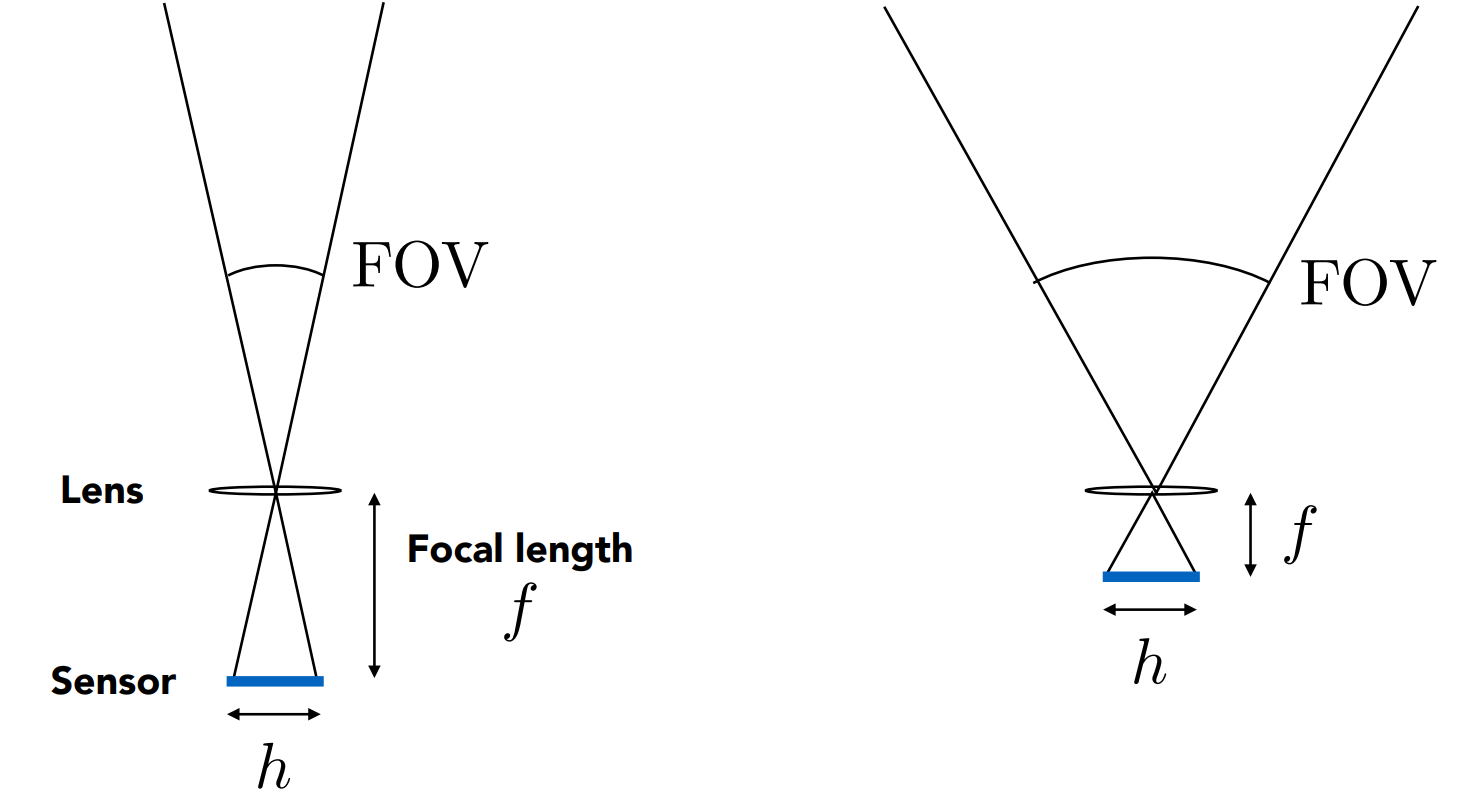

Field of View (FOV) and Focal Length

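Field of View (pinhole imaging, sensor size $h$, focal length $f$):

$$\mathrm{FOV} = 2\arctan\left(\frac{h}{2f}\right)$$

For a fixed sensor size, decreasing the focal length increases the field of view.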

Focal Length

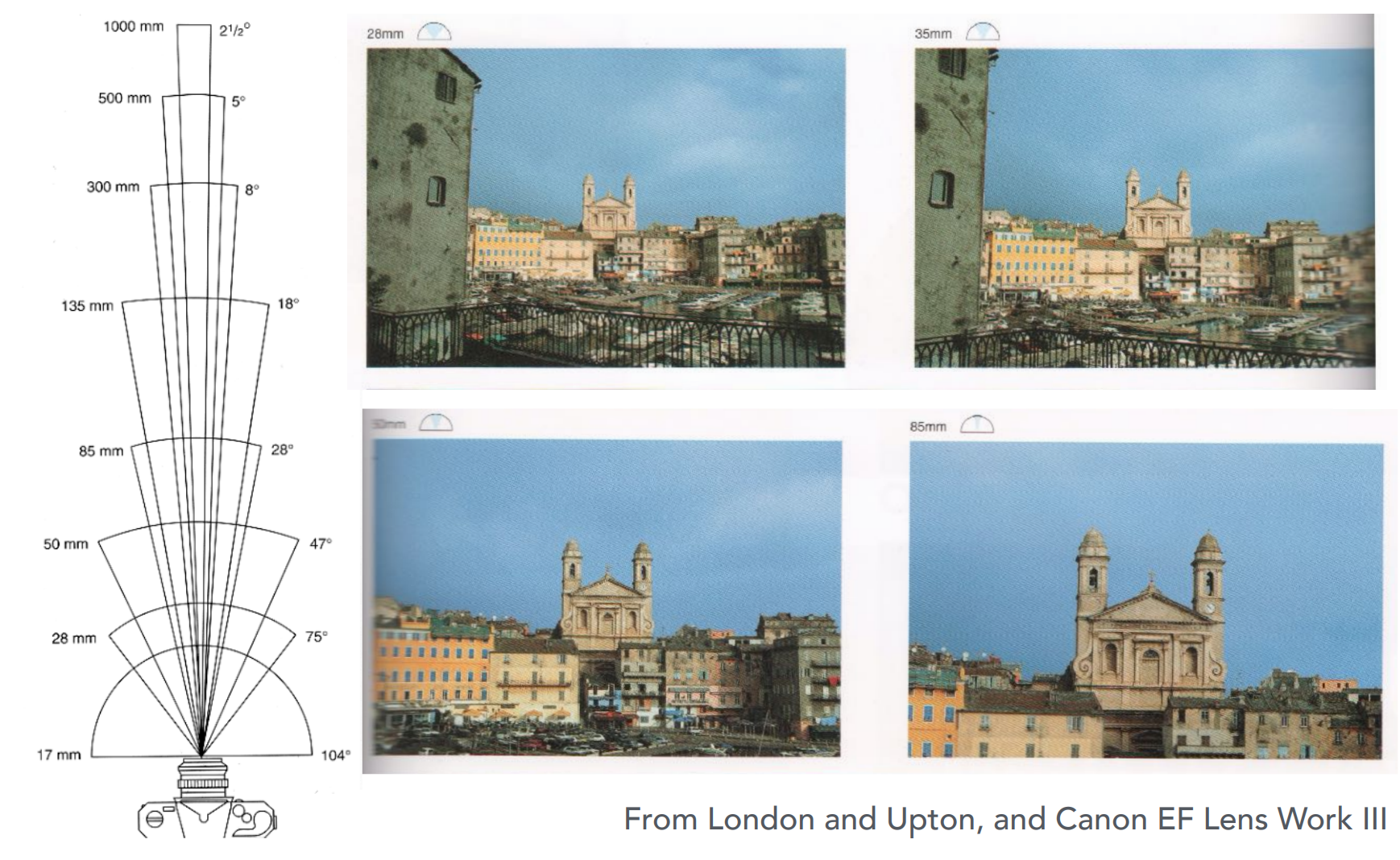

For historical reasons, it is common to refer to the angular field of view by the focal length of a lens used on 35mm-format film (36 x 24 mm)

When we say current cell phones have an approximately 28mm "equivalent" focal length, we are using this convention.

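Examples (35mm format):

Focal length -> Diagonal FOV

17mm (wide angle) -> 104°

50mm ("normal" lens) -> 47°

200mm (telephoto) -> 12°

Normally we fix the sensor size for convenience, but in fact both the sensor size and the focal length determine the field of view.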
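As a quick numerical check of $\mathrm{FOV} = 2\arctan(h/2f)$, the sketch below takes $h$ to be the diagonal of the 36 x 24 mm frame. The results are therefore the "35mm-equivalent" diagonal fields of view. The constant and function names are illustrative only.

```python
import math

# Diagonal of the 35mm (36 x 24 mm) format, in millimetres.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)

def fov_degrees(sensor_size_mm: float, focal_length_mm: float) -> float:
    """Angular field of view of a pinhole / ideal-lens camera: 2 * atan(h / 2f)."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))

for f in (17, 28, 50, 200):
    print(f"{f:>3} mm -> {fov_degrees(FULL_FRAME_DIAGONAL_MM, f):5.1f} deg (diagonal)")
```

Shortening the focal length widens the view. A smaller sensor at the same focal length narrows it, which is why "equivalent" focal lengths are quoted.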

Sensor Sizes

Credit: lensvid.com

Exposure

Definition: Exposure $H$ is the product of exposure time $T$ and irradiance $E$: $H = T \times E$.

Exposure time $T$: controlled by the shutter

Irradiance $E$: power of light falling on a unit area of the sensor; controlled by the lens aperture and focal length

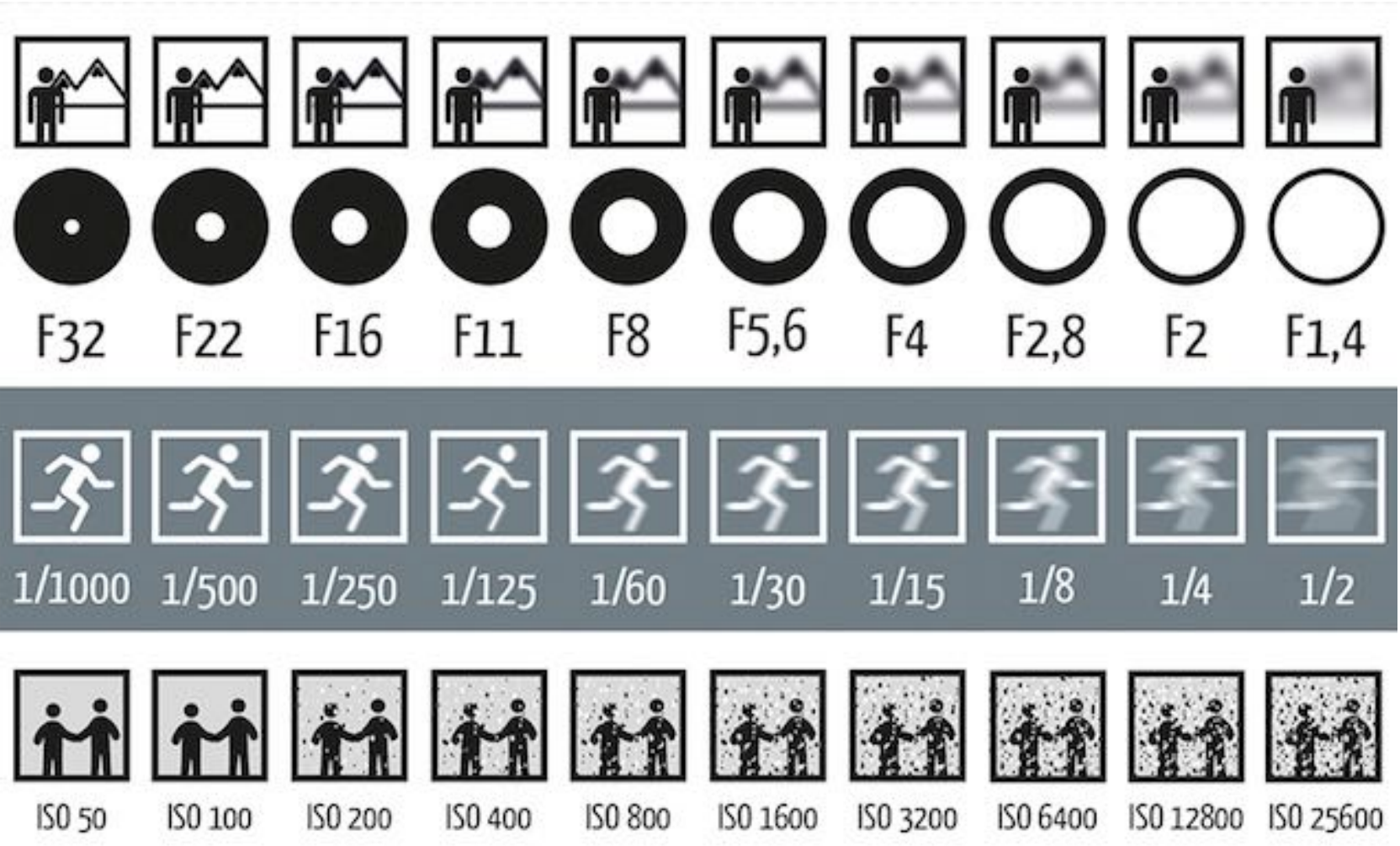

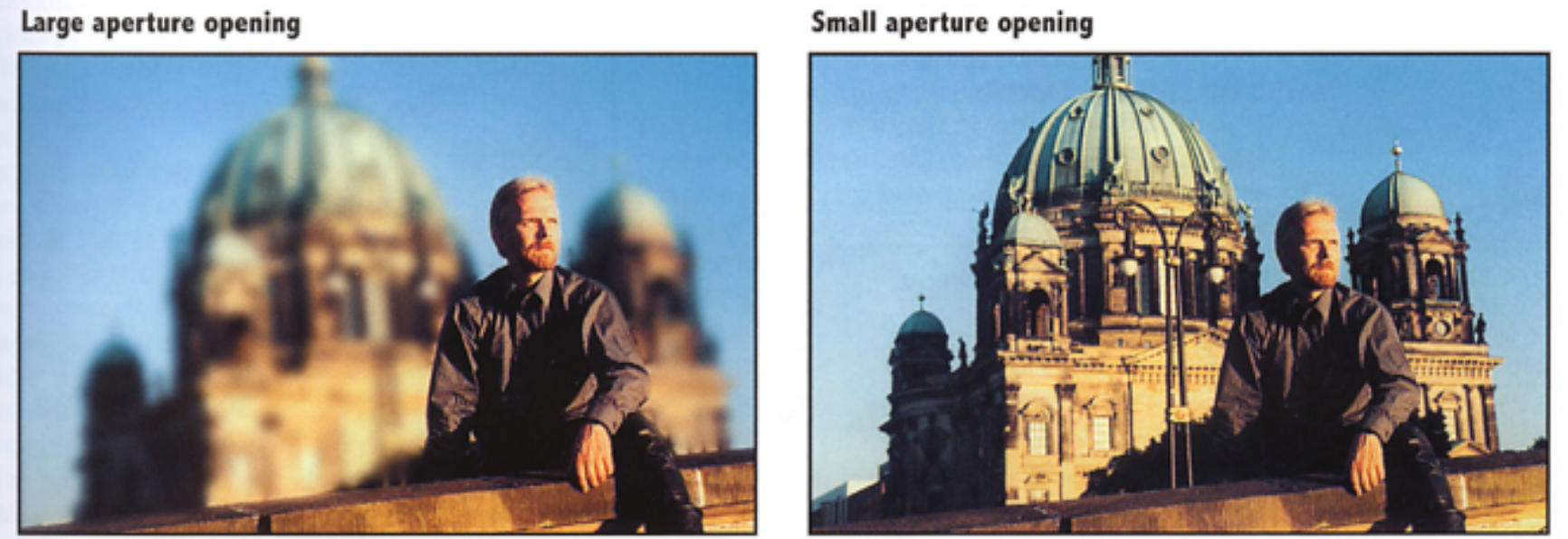

Exposure Control in Photography

Aperture size

Change the f-stop by opening/closing the aperture (if camera has iris control)

Shutter speed

Change the duration for which the sensor pixels integrate light

ISO gain

Change the amplification (analog and/or digital) between sensor values and digital image values

From top to bottom: aperture size, shutter speed, ISO gain

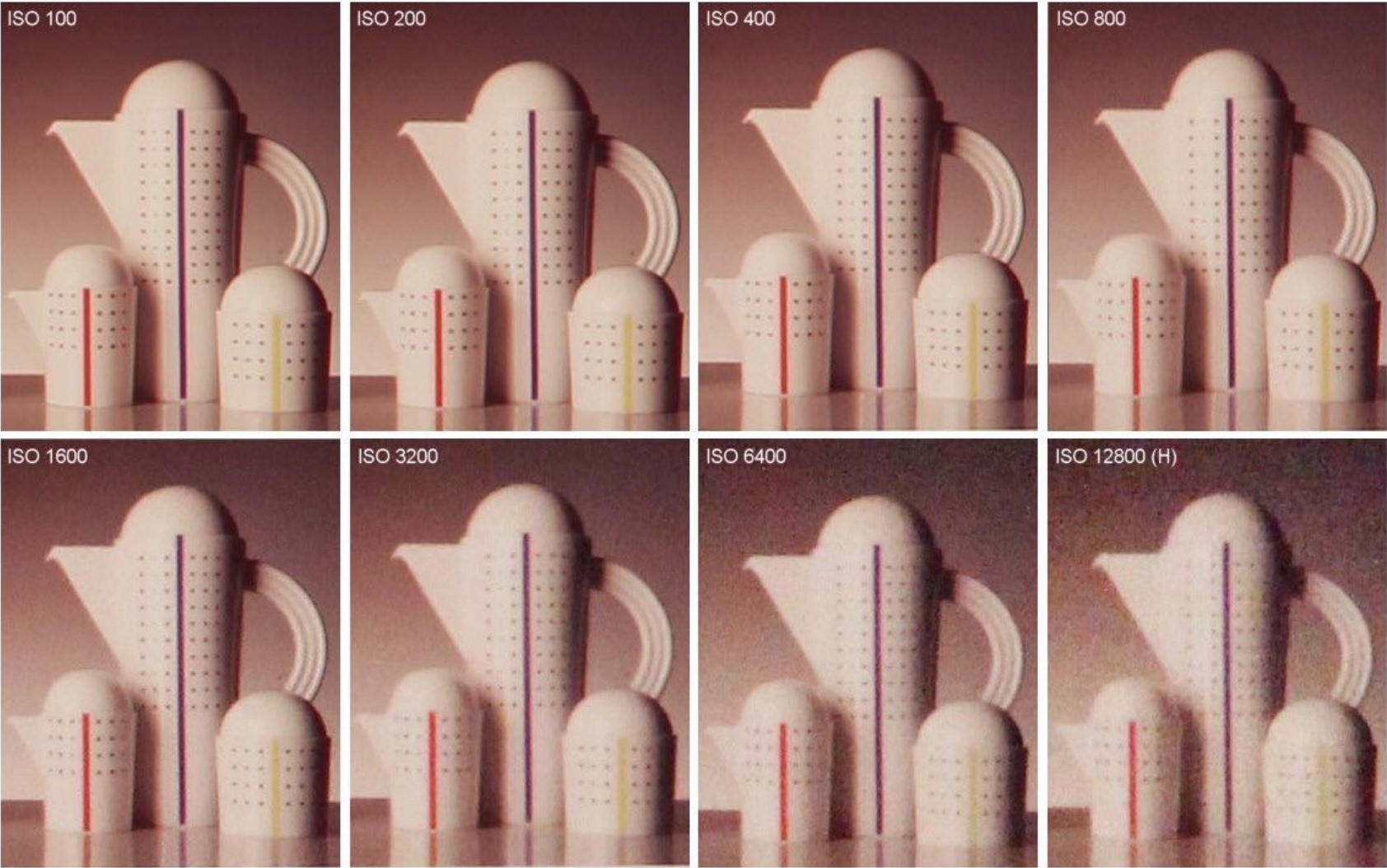

ISO (Gain)

Third variable for exposure

Film: trade sensitivity for grain

Digital: trade sensitivity for noise

Multiply signal before analog-to-digital conversion

Linear effect (ISO 200 needs half as much light as ISO 100)

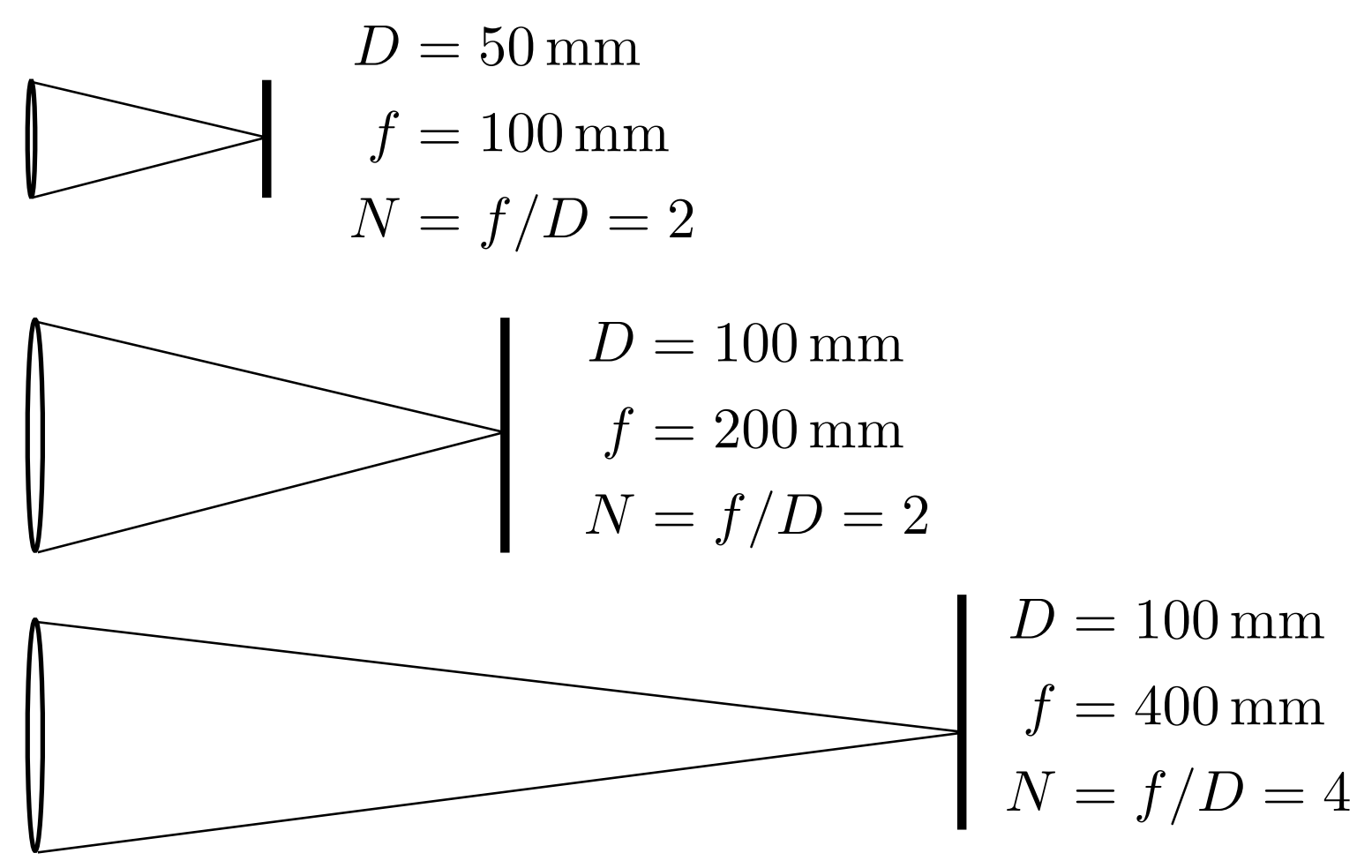

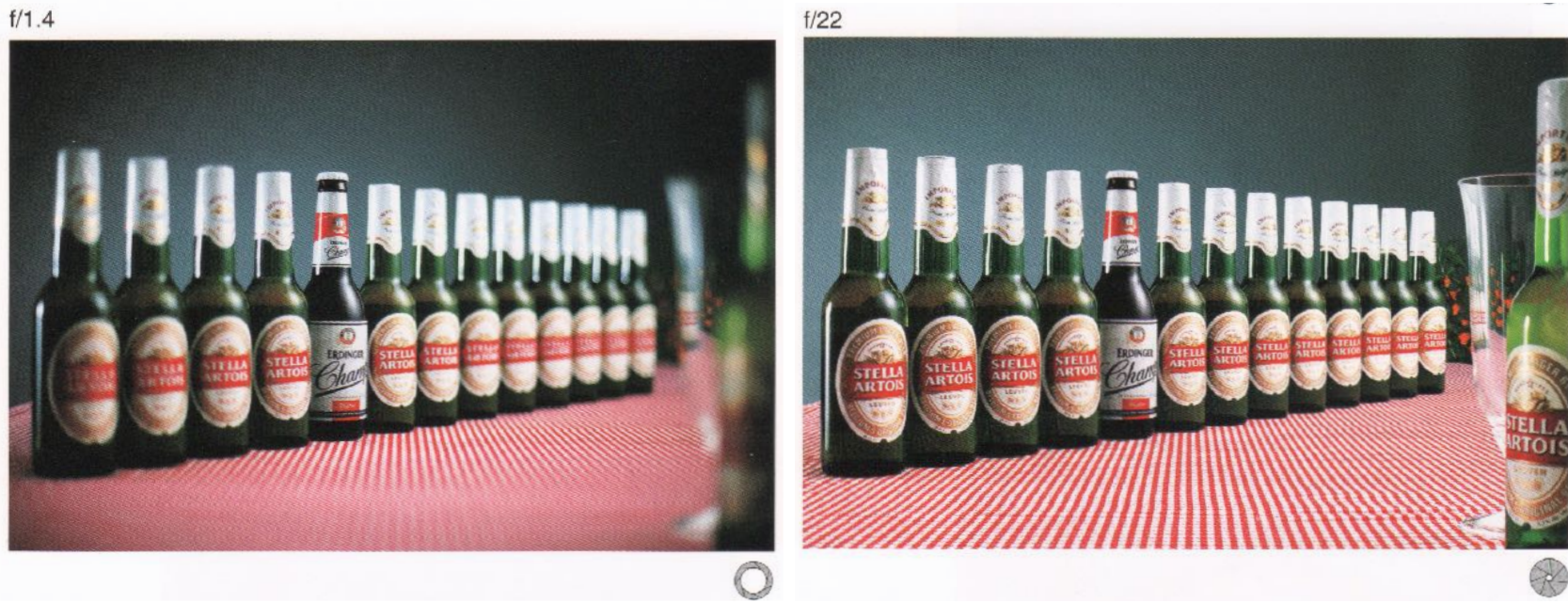

F-Number (F-Stop): Exposure Levels

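Written as FN or F/N, where $N$ is the f-number.

Definition: The f-number of a lens is defined as

$$N = \frac{f}{D}$$

which is the focal length $f$ divided by the diameter $D$ of the aperture.

An f-stop of 2 is sometimes written as f/2, reflecting the fact that the aperture diameter can be computed by dividing the focal length by the f-number: $D = f/N$.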

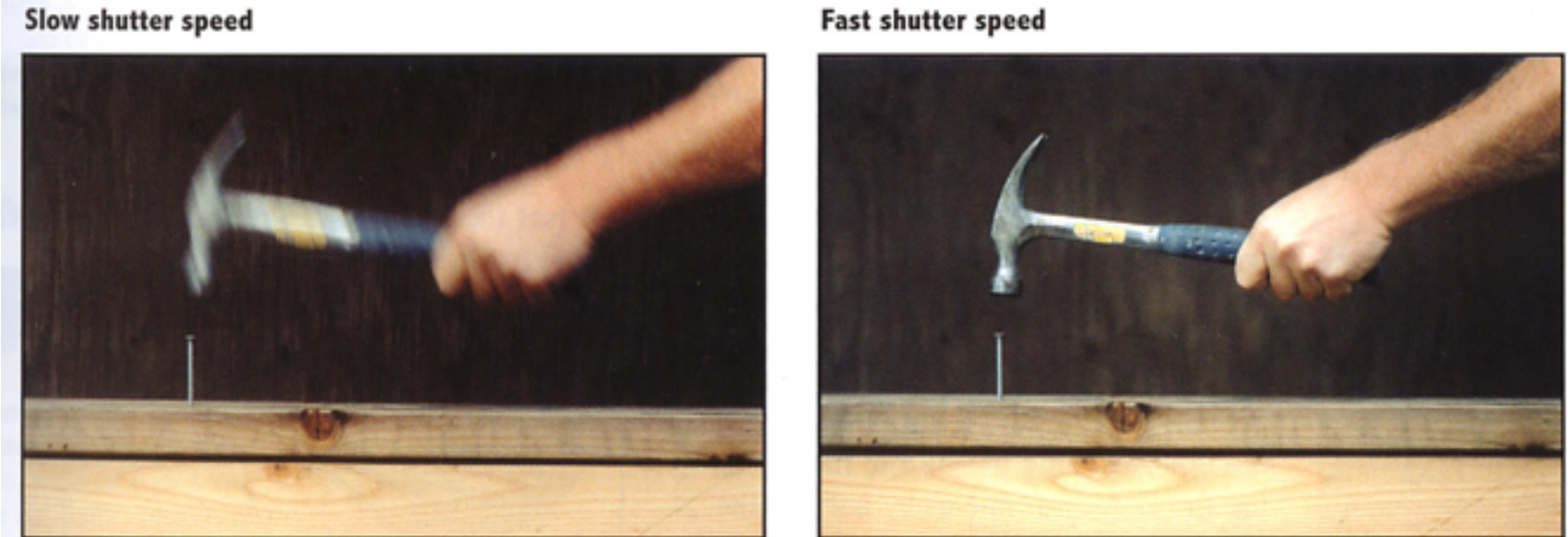
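The sketch below relates $N = f/D$ to the amount of light collected. It assumes a fixed focal length, so irradiance on the sensor scales with aperture area, i.e. with $D^2 \propto 1/N^2$. Consecutive full stops are therefore powers of $\sqrt{2}$, and each one halves the light. The helper names are hypothetical.

```python
import math

def aperture_diameter(focal_length_mm: float, f_number: float) -> float:
    """D = f / N."""
    return focal_length_mm / f_number

def relative_light(f_number: float, reference_f_number: float) -> float:
    """Light gathered at f_number relative to reference_f_number (area ~ 1/N^2)."""
    return (reference_f_number / f_number) ** 2

# Full stops: successive powers of sqrt(2), starting at f/1.4.
full_stops = [math.sqrt(2) ** k for k in range(1, 11)]
print([round(n, 1) for n in full_stops])
print(aperture_diameter(50.0, 2.0))   # a 50 mm lens at f/2 has a 25 mm aperture
print(relative_light(2.8, 2.0))       # about half the light of f/2
```

The exact powers give 5.7, 11.3 and 22.6. The marked values 5.6, 11 and 22 are the conventional roundings.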

Shutter Speed

Motion Blur: hand shake, subject movement

Doubling shutter time doubles motion blur

Rolling shutter: while the shutter is moving, different parts of the photo are actually captured at different times

It can also be caused by the sensor itself: a CMOS sensor reads out its pixels row by row rather than capturing every pixel simultaneously
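Both effects come from the same arithmetic, position = speed × time. This sketch assumes a constant image-plane speed in pixels per second and a fixed per-row readout delay. Neither value is taken from a real sensor, and the function names are hypothetical.

```python
def motion_blur_px(speed_px_per_s: float, exposure_s: float) -> float:
    """Length of the blur streak: how far the image moves while the shutter is open."""
    return speed_px_per_s * exposure_s

def rolling_shutter_positions(num_rows: int, row_readout_s: float,
                              x0_px: float, speed_px_per_s: float) -> list[float]:
    """x-position of a vertical bar moving right, as recorded by each sensor row.

    Row r is read at time r * row_readout_s, so later rows see the bar further
    along and a vertical edge is recorded as a slanted one.
    """
    return [x0_px + speed_px_per_s * r * row_readout_s for r in range(num_rows)]

print(motion_blur_px(1000.0, 1 / 250), motion_blur_px(1000.0, 1 / 125))
print(rolling_shutter_positions(4, 1e-3, 0.0, 1000.0))
```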

Constant Exposure: F-Stop vs Shutter Speed

| F-Stop | 1.4 | 2.0 | 2.8 | 4.0 | 5.6 | 8.0 | 11.0 | 16.0 | 22.0 | 32.0 |
|---|---|---|---|---|---|---|---|---|---|---|
| Shutter (s) | 1/500 | 1/250 | 1/125 | 1/60 | 1/30 | 1/15 | 1/8 | 1/4 | 1/2 | 1 |

These combinations give equivalent exposure.

For moving objects, photographers must trade off depth of field and motion blur
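The table can be checked numerically under a simple model, which is an assumption of this sketch. Brightness is taken to be proportional to $T/N^2$ (shutter time over f-number squared), multiplied by the ISO gain, which is treated as linear. Noise and lens transmission are ignored.

```python
import math

# (f-number, shutter time in seconds), taken from the table above.
settings = [(1.4, 1 / 500), (2.0, 1 / 250), (2.8, 1 / 125), (4.0, 1 / 60), (5.6, 1 / 30),
            (8.0, 1 / 15), (11.0, 1 / 8), (16.0, 1 / 4), (22.0, 1 / 2), (32.0, 1.0)]

def relative_brightness(f_number: float, shutter_s: float, iso: float = 100.0) -> float:
    """Brightness ~ (T / N^2) * (ISO / 100) under a linear-gain model."""
    return (shutter_s / f_number ** 2) * (iso / 100.0)

values = [relative_brightness(n, t) for n, t in settings]
spread_stops = math.log2(max(values) / min(values))
print(f"spread across the table: {spread_stops:.2f} stops")

# Doubling ISO compensates for halving the shutter time.
print(relative_brightness(2.8, 1 / 250, iso=200), relative_brightness(2.8, 1 / 125))
```

The small residual spread comes from the nominal values being rounded (for example 1/60 s rather than 1/62.5 s). This is why the pairs are treated as equivalent in practice.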

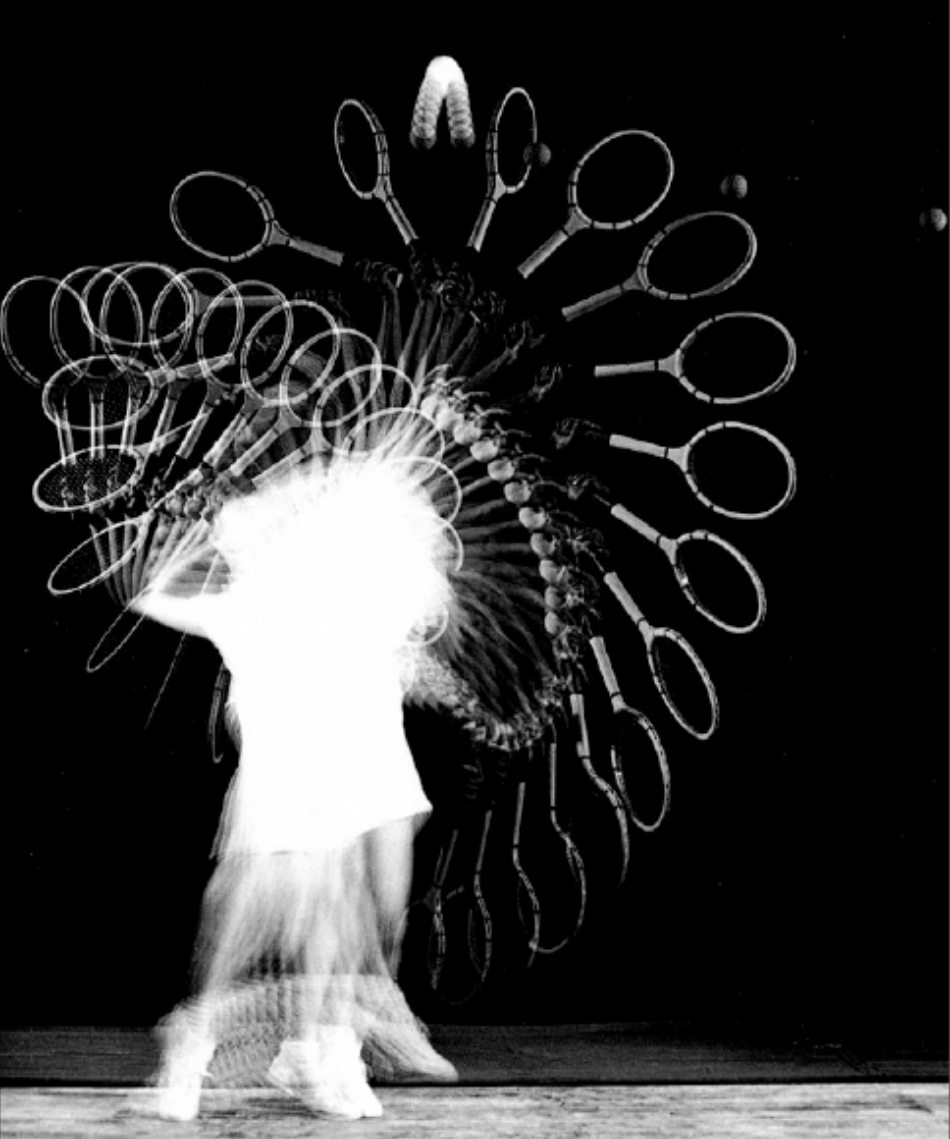

Fast/Slow Photography - Applications

High-Speed Photography

Normal exposure = extremely fast shutter speed × (large aperture and/or high ISO)

Long-Exposure Photography

Thin Lens Approximation

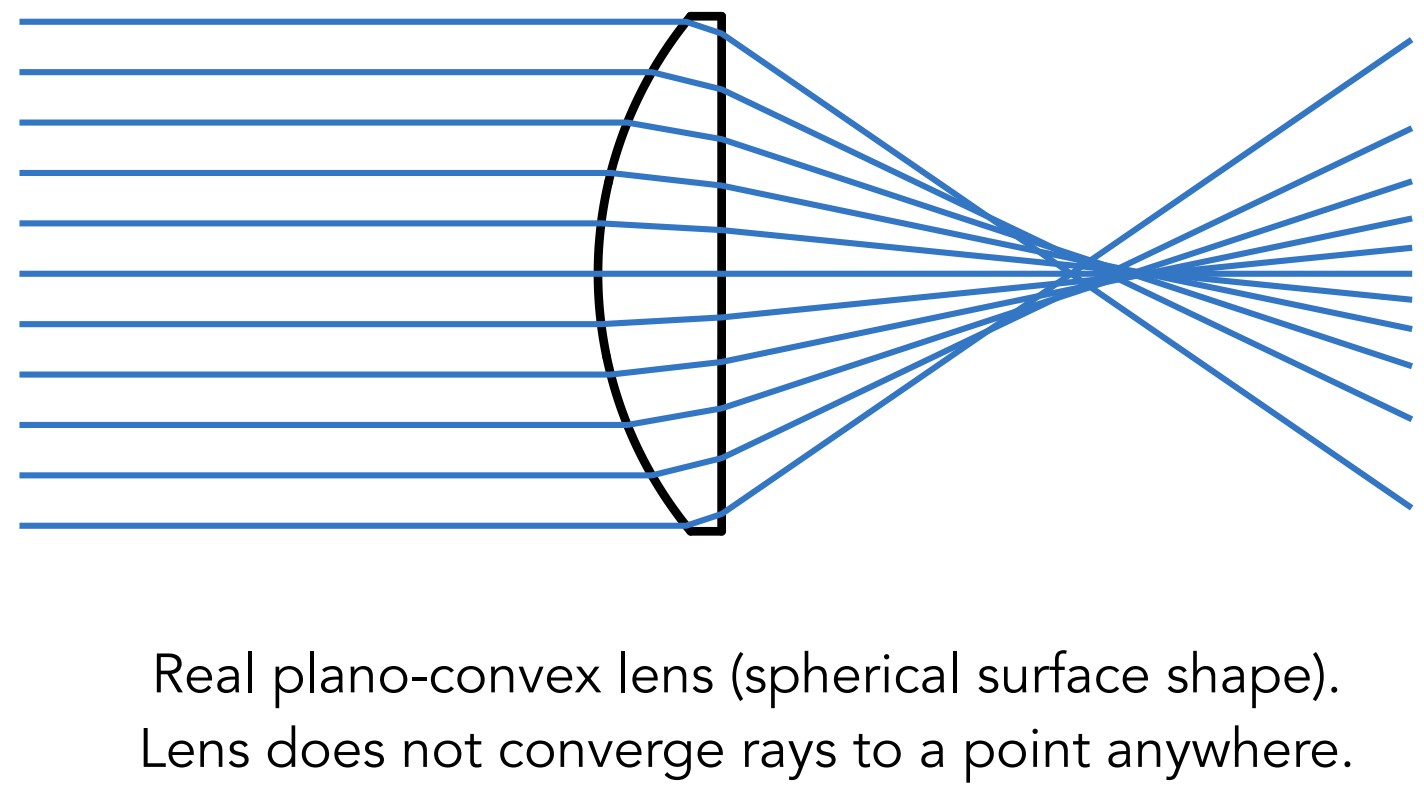

Real lens designs are highly complex.

Real Lens - Aberrations

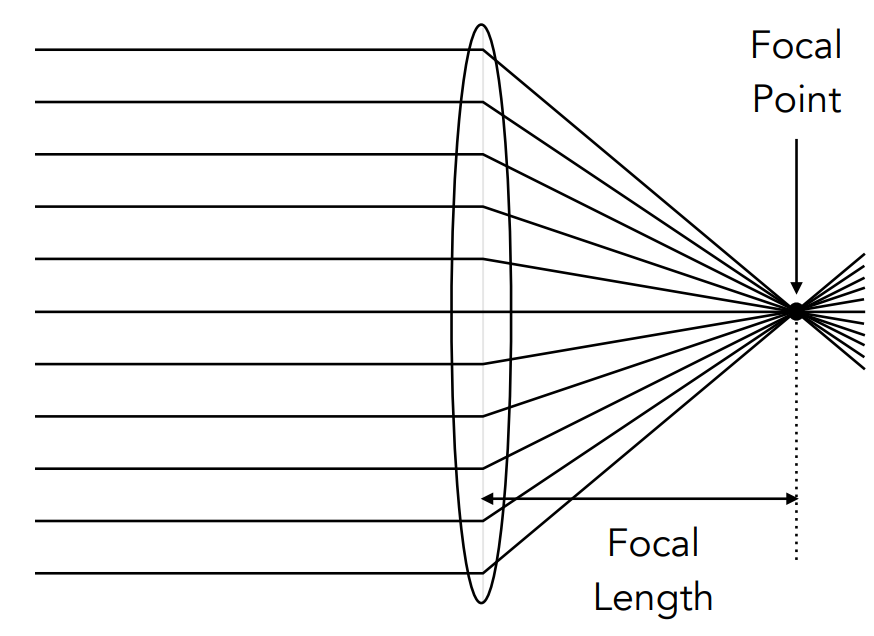

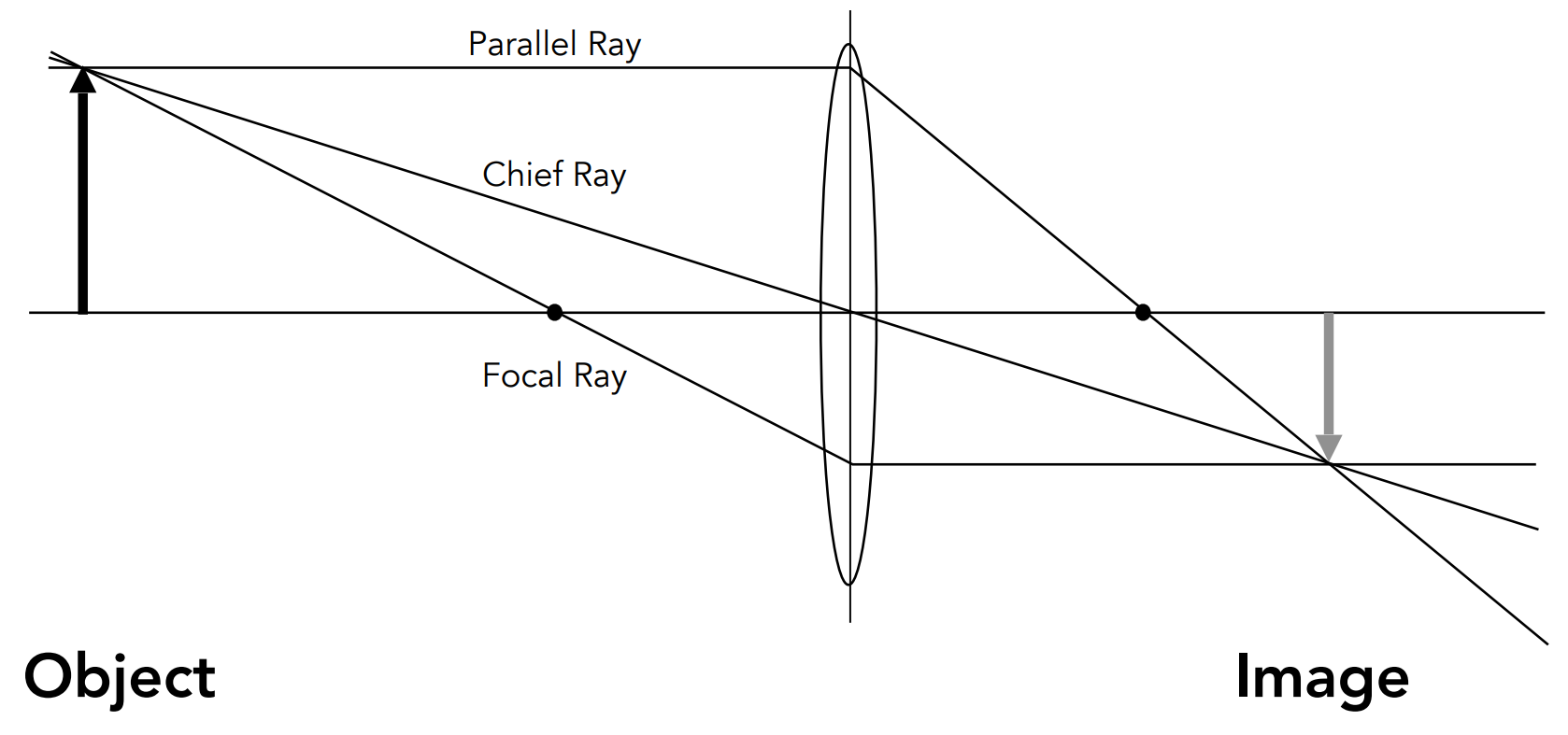

Ideal Thin Lens

All parallel rays entering a lens pass through the focal point of that lens

All rays through a focal point will be parallel after passing through the lens

Focal length can be changed arbitrarily (in practice, by combining several lenses, as in a zoom lens)

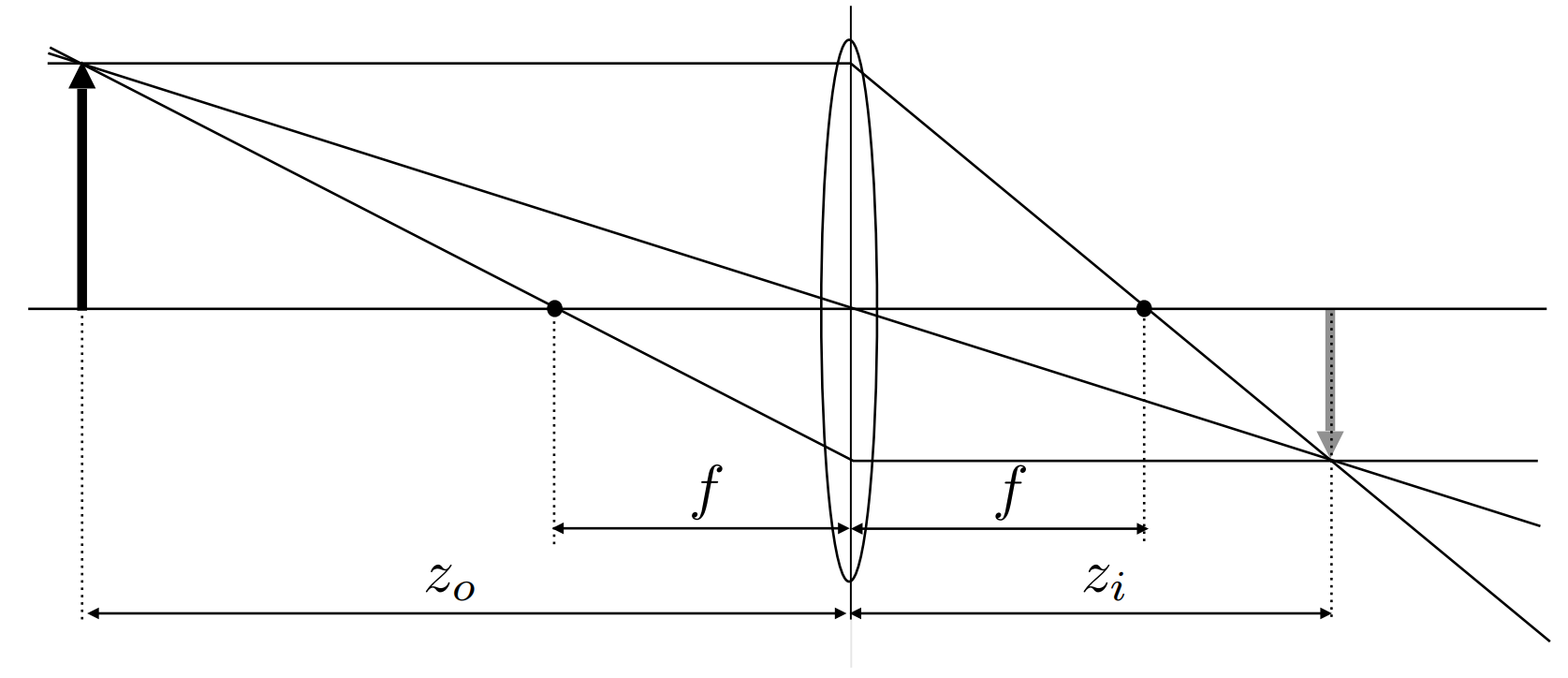

The Thin Lens Equation

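Gaussian Thin Lens Equation:

$$\frac{1}{f} = \frac{1}{z_i} + \frac{1}{z_o}$$

where $z_o$ is the distance from the object to the lens and $z_i$ is the distance from the lens to the image. This holds for an ideal thin lens only.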

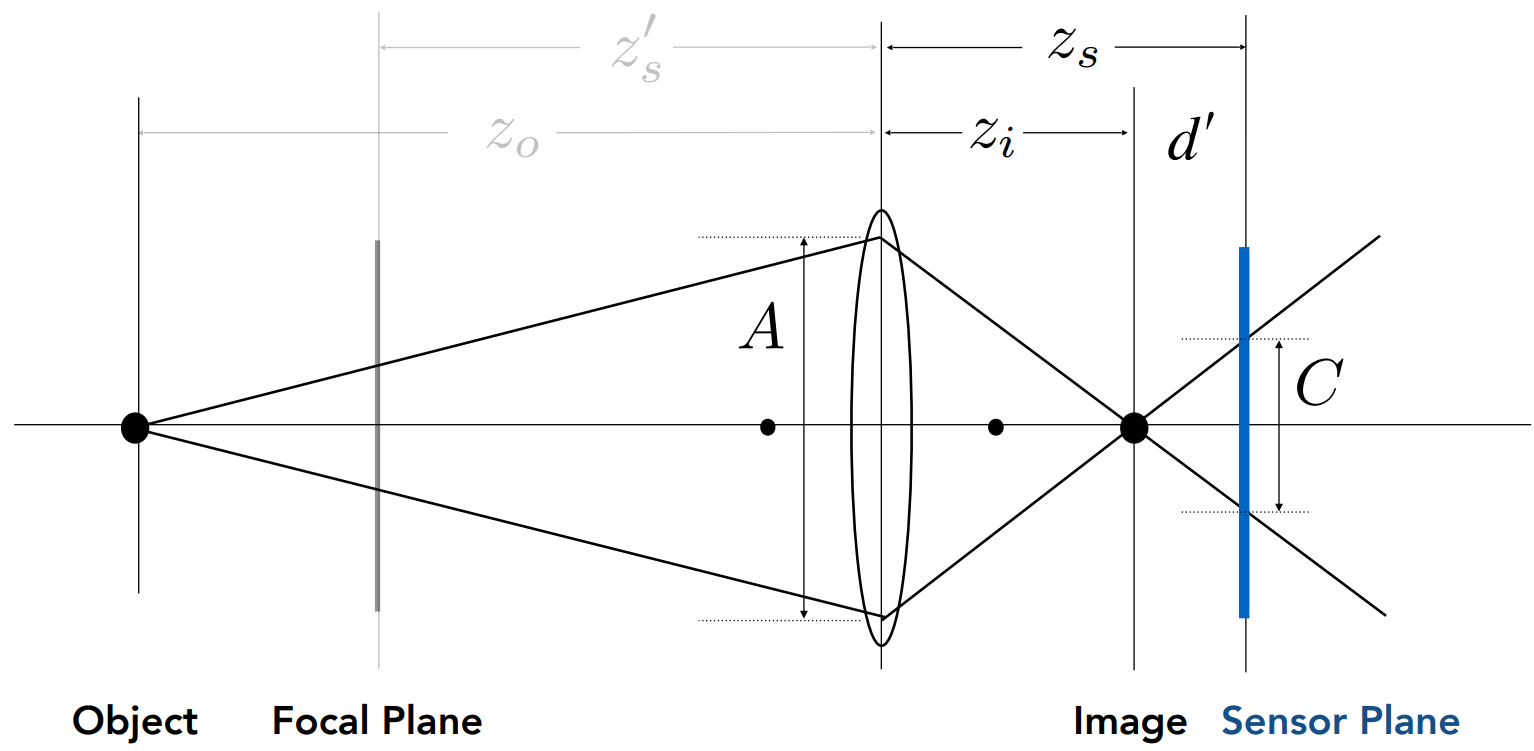

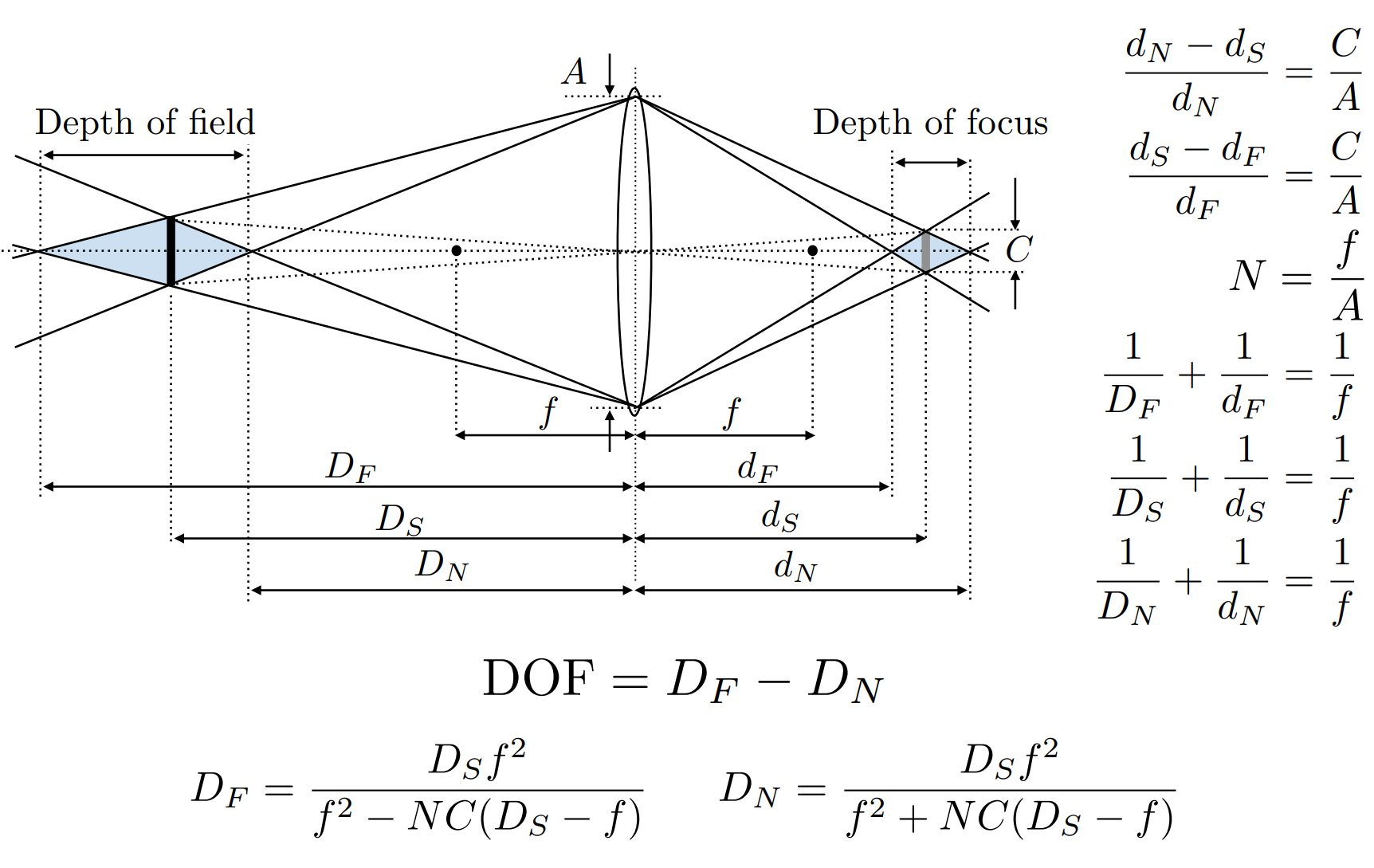
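The sketch below solves the thin lens equation for the image distance $z_i$, given $f$ and the object distance $z_o$. As $z_o \to \infty$, $z_i \to f$, which matches the rule that parallel rays focus at the focal point. The function names are hypothetical.

```python
def image_distance(f: float, z_o: float) -> float:
    """Solve 1/f = 1/z_i + 1/z_o for z_i (needs z_o > f for a real image)."""
    if z_o <= f:
        raise ValueError("object at or inside the focal length: no real image")
    return 1.0 / (1.0 / f - 1.0 / z_o)

def magnification(f: float, z_o: float) -> float:
    """Lateral magnification |h_i / h_o| = z_i / z_o."""
    return image_distance(f, z_o) / z_o

z_i = image_distance(50.0, 2000.0)   # 50 mm lens, subject at 2 m (units: mm)
print(z_i, magnification(50.0, 2000.0))
```

An equivalent form that is handy for checking results is $(z_o - f)(z_i - f) = f^2$.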

Defocus Blur

Circle of Confusion, CoC: an optical spot caused by a cone of light rays from a lens not coming to a perfect focus when imaging a point source.

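The CoC size is proportional to the size of the aperture: $\frac{C}{A} = \frac{|z_s - z_i|}{z_i}$, where $A$ is the aperture diameter, $z_s$ the distance from the lens to the sensor, and $z_i$ the distance from the lens to the image of the point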
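The sketch below evaluates the circle of confusion for a point that is not in the focal plane. It uses the similar-triangles relation $C = A\,|z_s - z_i| / z_i$ and takes the aperture diameter as $f/N$. The lens (50 mm, f/2) and the distances are hypothetical, and the names are illustrative.

```python
def image_distance(f: float, z_o: float) -> float:
    """Thin lens equation solved for z_i."""
    return 1.0 / (1.0 / f - 1.0 / z_o)

def coc_diameter(aperture: float, z_s: float, z_i: float) -> float:
    """Similar triangles: C / A = |z_s - z_i| / z_i."""
    return aperture * abs(z_s - z_i) / z_i

f, N = 50.0, 2.0                      # hypothetical 50 mm f/2 lens (units: mm)
z_s = image_distance(f, 2000.0)       # sensor placed to focus at 2 m
z_i = image_distance(f, 4000.0)       # a point at 4 m focuses in front of the sensor
c_full = coc_diameter(f / N, z_s, z_i)
c_half = coc_diameter(f / (2 * N), z_s, z_i)   # stop down by two stops (f/4)
print(c_full, c_half)
```

Halving the aperture diameter (from f/2 to f/4) halves the blur spot, while a point exactly in focus ($z_i = z_s$) gives zero blur.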

F-Numbers

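See the Exposure section. Since the aperture diameter is the focal length divided by the f-number, a larger f-number means a smaller aperture and therefore a smaller CoC.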

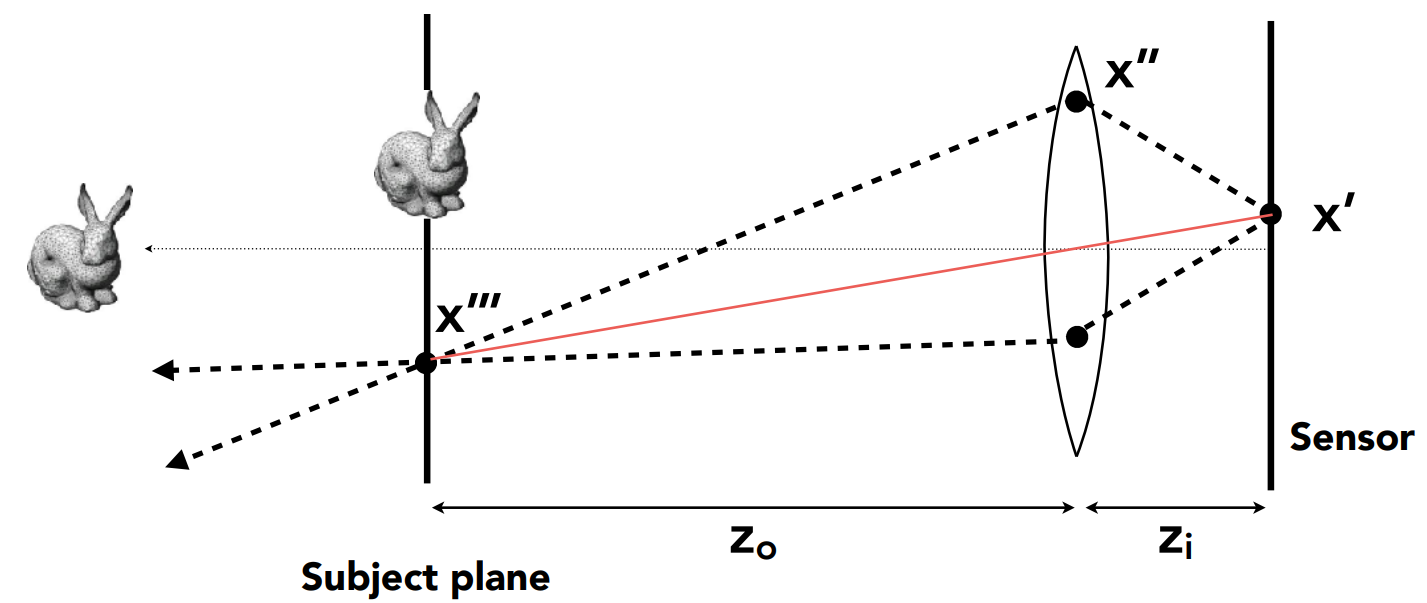

Ray Tracing Ideal Thin Lenses

Setup

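Choose sensor size, lens focal length, and aperture size

Choose depth of subject of interest $z_o$

Compute the corresponding depth of the sensor $z_i$ from the thin lens equation

Rendering

For each pixel $x'$ on the sensor

Sample random points $x''$ on the lens plane

Every ray from $x'$ through the lens hits the same point $x'''$ on the plane of focus (by the thin lens formula), so consider the ray connecting $x''$ and $x'''$

Estimate radiance on the ray $x'' \to x'''$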

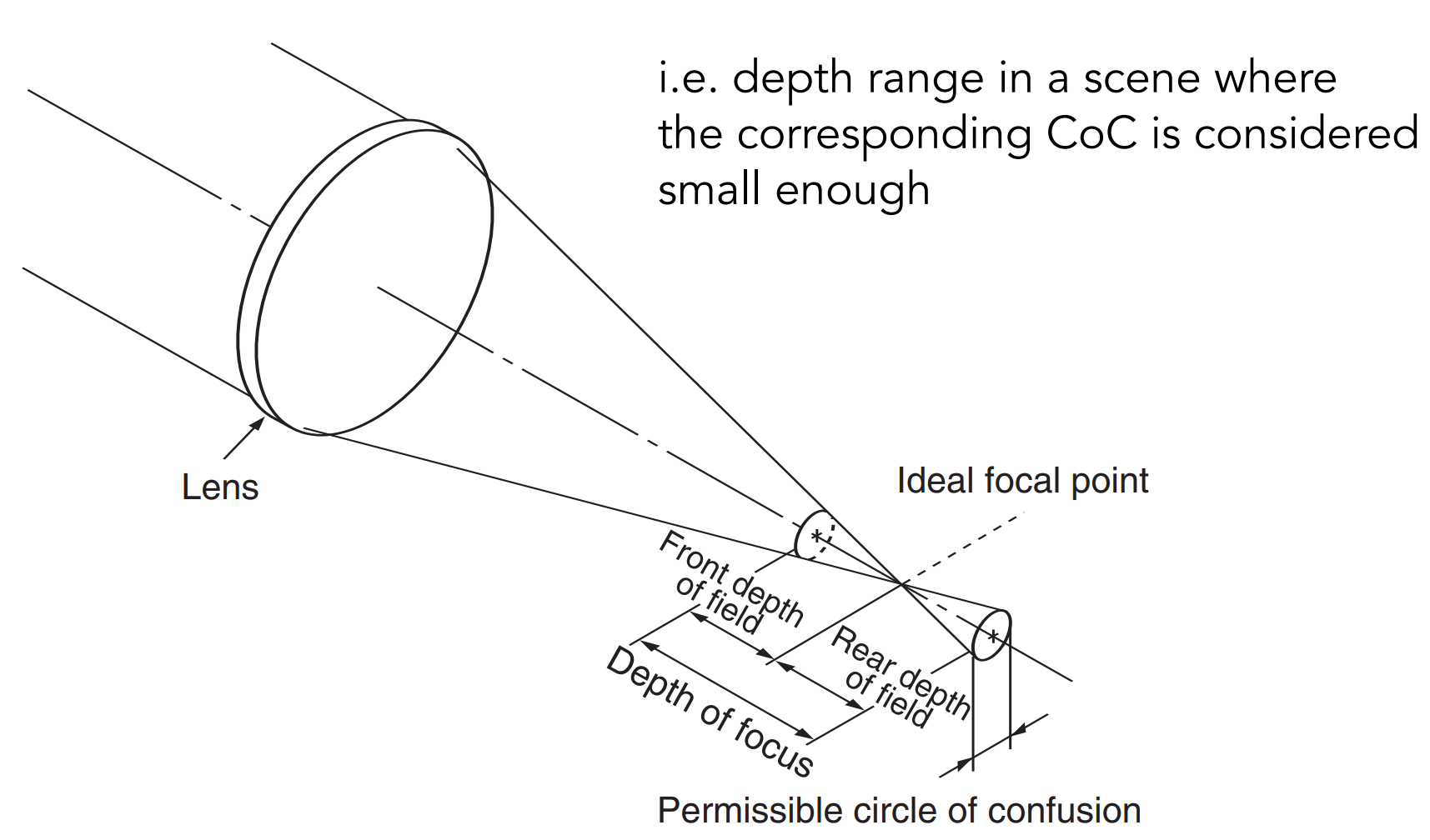
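The sketch below generates one camera ray by following the steps above. It assumes a coordinate convention of its own: the lens is centred at the origin in the $z = 0$ plane, the sensor lies at $z = +z_i$, and the plane of focus at $z = -z_o$. The point $x'''$ is found by sending the undeflected ray from $x'$ through the lens centre. The lens point $x''$ is sampled uniformly over the aperture disk. `thin_lens_ray` is a hypothetical name and is not tied to any renderer.

```python
import math
import random

def thin_lens_ray(pixel_xy, z_i, z_o, lens_radius, rng):
    """Return (origin x'', unit direction towards x''') for pixel x' = (sx, sy, z_i)."""
    sx, sy = pixel_xy
    # x''': the chief ray through the lens centre is not bent; it meets the plane
    # z = -z_o after scaling x' by -z_o / z_i. All rays from x' converge there.
    scale = z_o / z_i
    focus = (-sx * scale, -sy * scale, -z_o)
    # x'': uniform point on the lens disk (sqrt keeps the density uniform in area).
    r = lens_radius * math.sqrt(rng.random())
    theta = 2.0 * math.pi * rng.random()
    origin = (r * math.cos(theta), r * math.sin(theta), 0.0)
    d = tuple(fc - oc for fc, oc in zip(focus, origin))
    norm = math.sqrt(sum(c * c for c in d))
    return origin, tuple(c / norm for c in d)

rng = random.Random(0)
print(thin_lens_ray((1.0, -0.5), 51.282, 2000.0, 12.5, rng))
```

A pixel's radiance estimate would then average the radiance returned along many such rays (for instance from a hypothetical `trace(origin, direction)`). Points on the plane of focus stay sharp, while other depths blur according to the CoC.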

Depth of Field

Set the CoC as the maximum permissible blur spot on the image plane

Blur spots no larger than this appear sharp, i.e. roughly as a single pixel, in the final image

Depth of Field: Depth range in a scene where the corresponding CoC is considered small enough
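Combining the thin lens equation with the CoC relation $C/A = |z_s - z_i|/z_i$, with $A = f/N$, gives closed-form near and far limits for a lens focused at distance $D_S$ with maximum permissible CoC $c$:

$$D_N = \frac{D_S f^2}{f^2 + N c (D_S - f)}, \qquad D_F = \frac{D_S f^2}{f^2 - N c (D_S - f)}$$

The sketch below implements these limits and treats a non-positive denominator as "sharp to infinity". The lens, CoC limit and distances in the usage line are hypothetical.

```python
import math

def image_distance(f: float, z_o: float) -> float:
    """Thin lens equation solved for z_i."""
    return 1.0 / (1.0 / f - 1.0 / z_o)

def coc_diameter(aperture: float, z_s: float, z_i: float) -> float:
    """C / A = |z_s - z_i| / z_i."""
    return aperture * abs(z_s - z_i) / z_i

def dof_limits(f: float, N: float, c: float, D_s: float) -> tuple[float, float]:
    """Near/far depths whose CoC equals c, for a lens focused at D_s (same units)."""
    near = D_s * f ** 2 / (f ** 2 + N * c * (D_s - f))
    denom = f ** 2 - N * c * (D_s - f)
    far = D_s * f ** 2 / denom if denom > 0 else math.inf
    return near, far

near, far = dof_limits(50.0, 2.8, 0.03, 3000.0)   # 50 mm f/2.8, c = 0.03 mm, focus at 3 m
print(near, far, far - near)
```

Stopping down (a larger $N$) widens the range. Once $D_S$ reaches the hyperfocal distance, the far limit becomes infinite.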

http://graphics.stanford.edu/courses/cs178/applets/dof.html

II. Light Field/Lumigraph

The Plenoptic Function

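Definition: The plenoptic function describes the intensity of light seen from any viewpoint, in any direction, at any wavelength, at any time:

$$P(\theta, \phi, \lambda, t, V_X, V_Y, V_Z)$$

where $(\theta, \phi)$ is the viewing direction, $\lambda$ the wavelength, $t$ the time, and $(V_X, V_Y, V_Z)$ the position of the viewer.

Grayscale snapshot: $P(\theta, \phi)$, the intensity of light seen from a single viewpoint at a single time, averaged over the visible wavelengths

Color snapshot: $P(\theta, \phi, \lambda)$

Movie: $P(\theta, \phi, \lambda, t)$

Holographic movie: $P(\theta, \phi, \lambda, t, V_X, V_Y, V_Z)$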

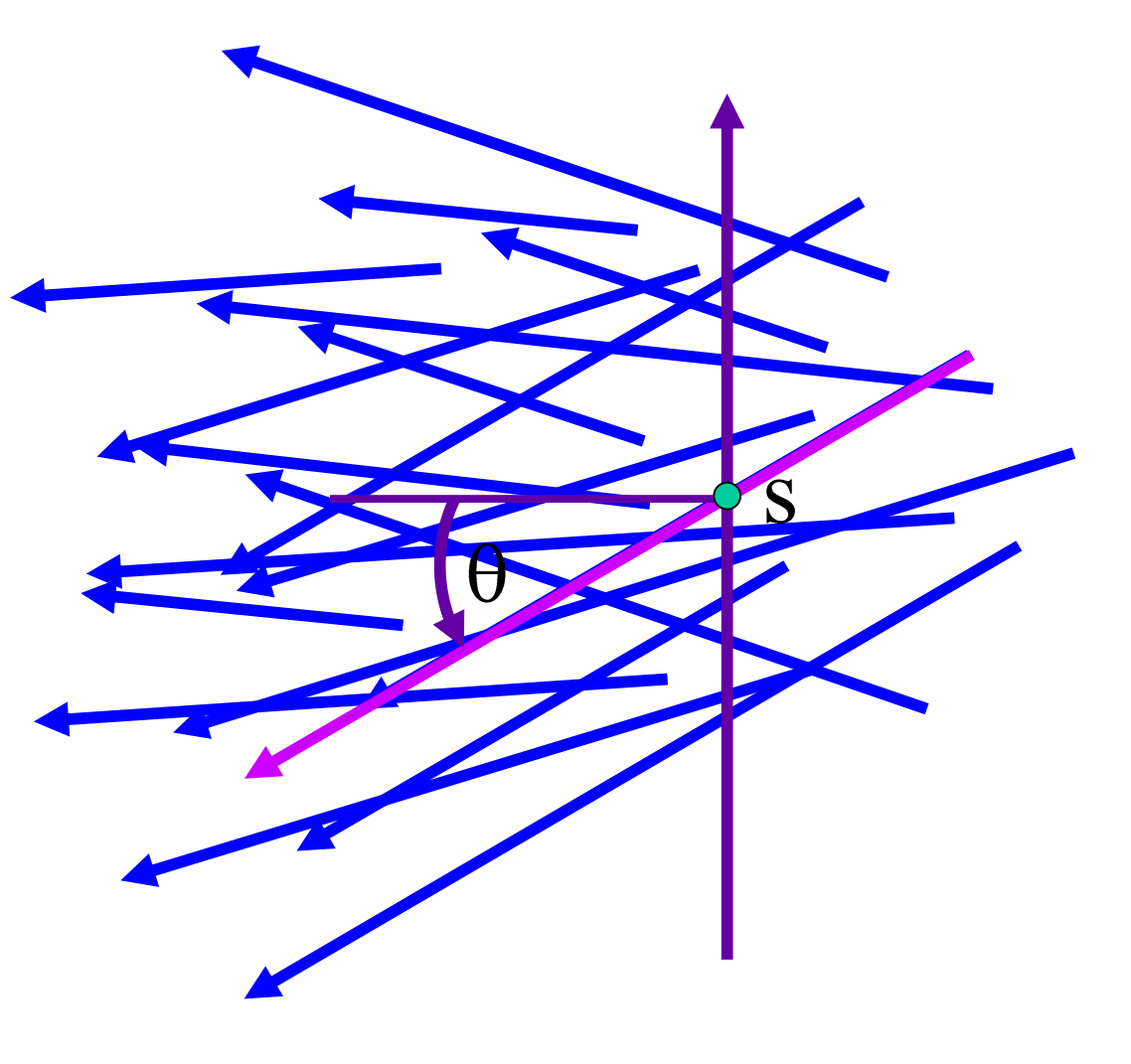

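Definition: Ignoring time and color, a ray is defined by the 5D function

$$P(\theta, \phi, V_X, V_Y, V_Z)$$

where $(V_X, V_Y, V_Z)$ is a 3D position and $(\theta, \phi)$ is a 2D direction.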

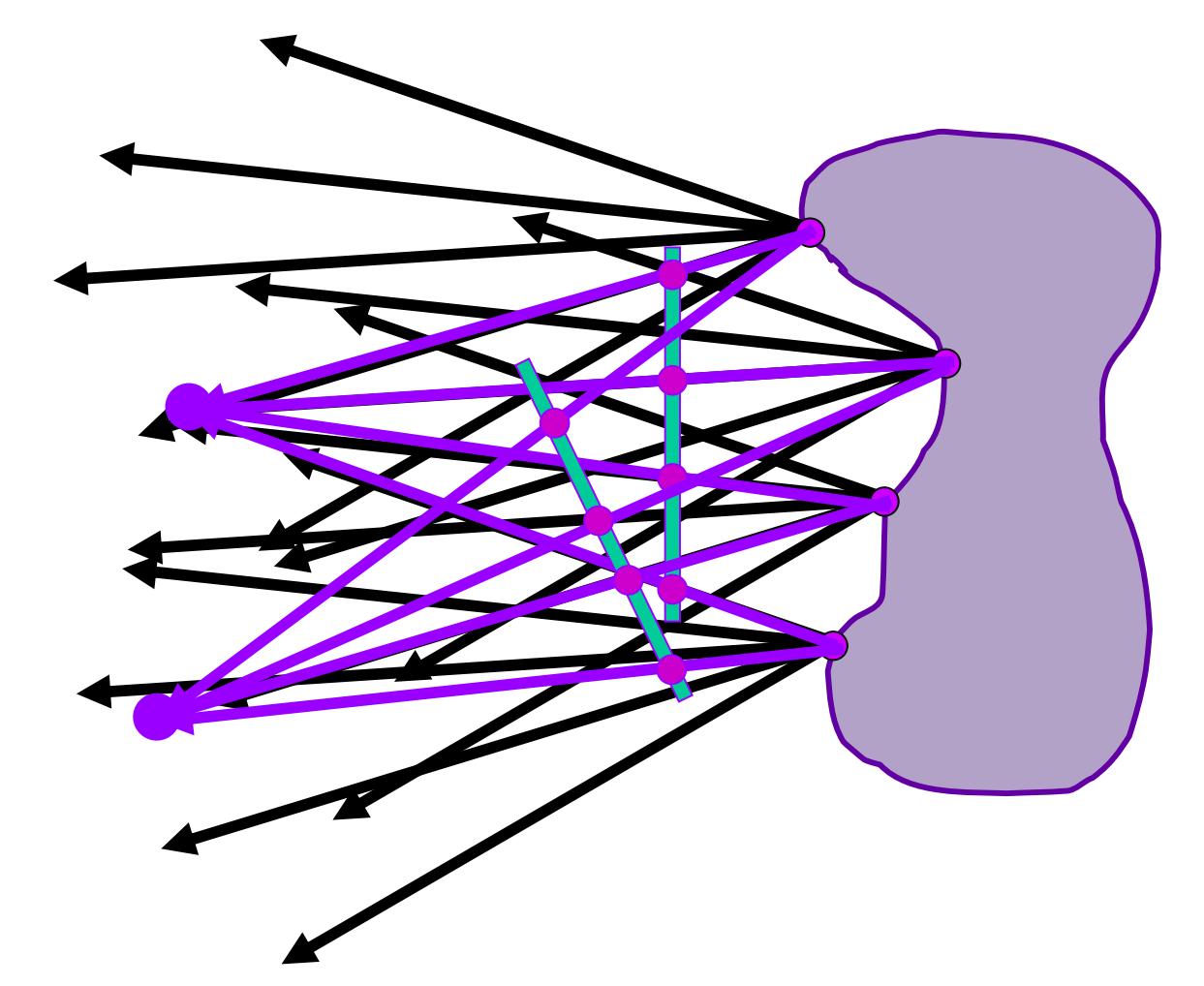

The Plenoptic Surface

Describe the radiance information of an object by a 4D set of rays, each represented by:

a 2D position (surface coordinate), and

a 2D direction $(\theta, \phi)$.

View Synthesis

Place a camera at some position looking at the object. We already know the radiance carried by every ray the camera casts, so we can synthesize the view simply by looking up each ray's radiance in the (4D) plenoptic function.

Furthermore, we may completely ignore the shape of the object and record only the light field.

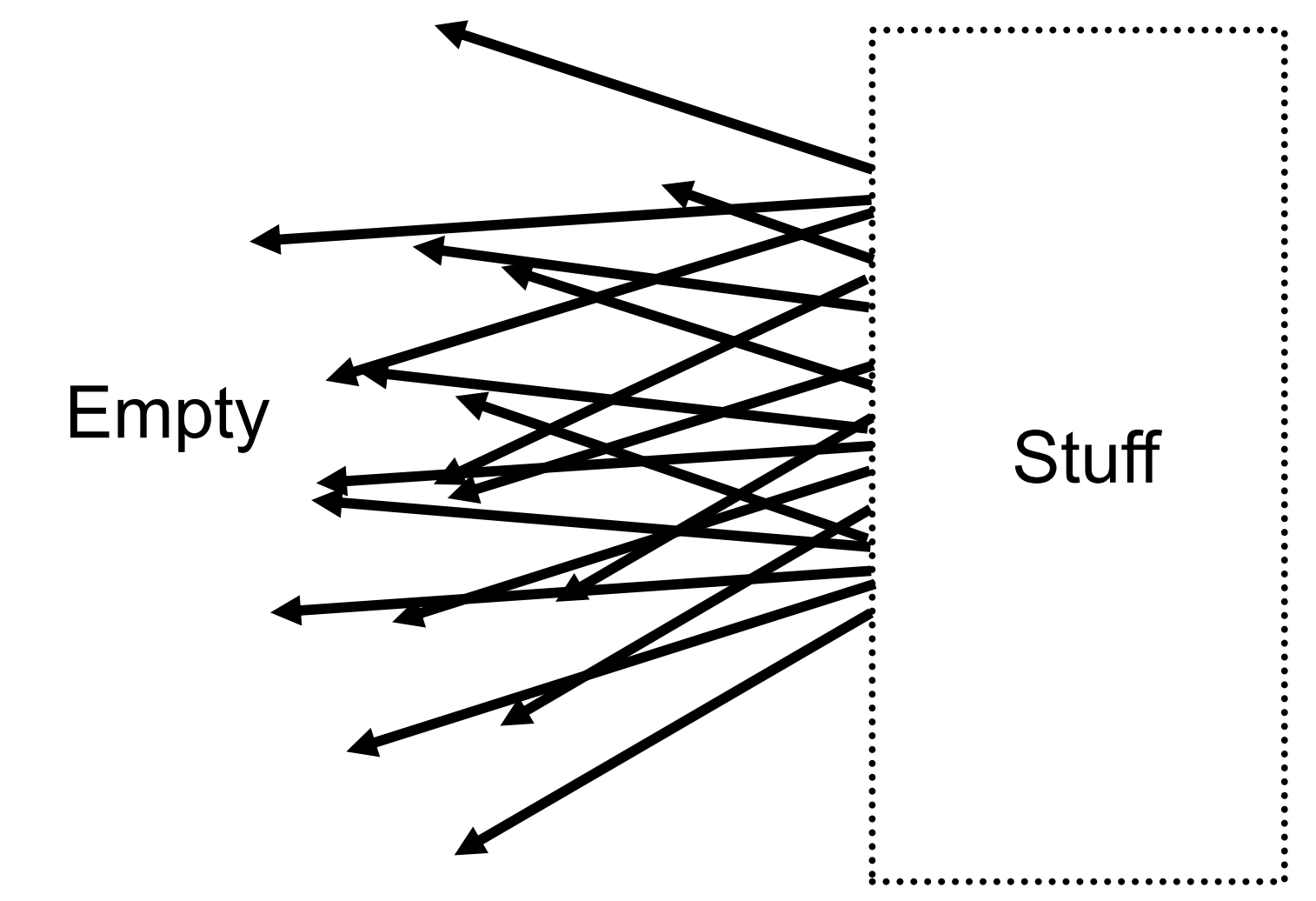

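Outside the convex hull of the object (in free space), radiance does not change along a ray, so the 5D ray description reduces to 4D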

Lumigraph

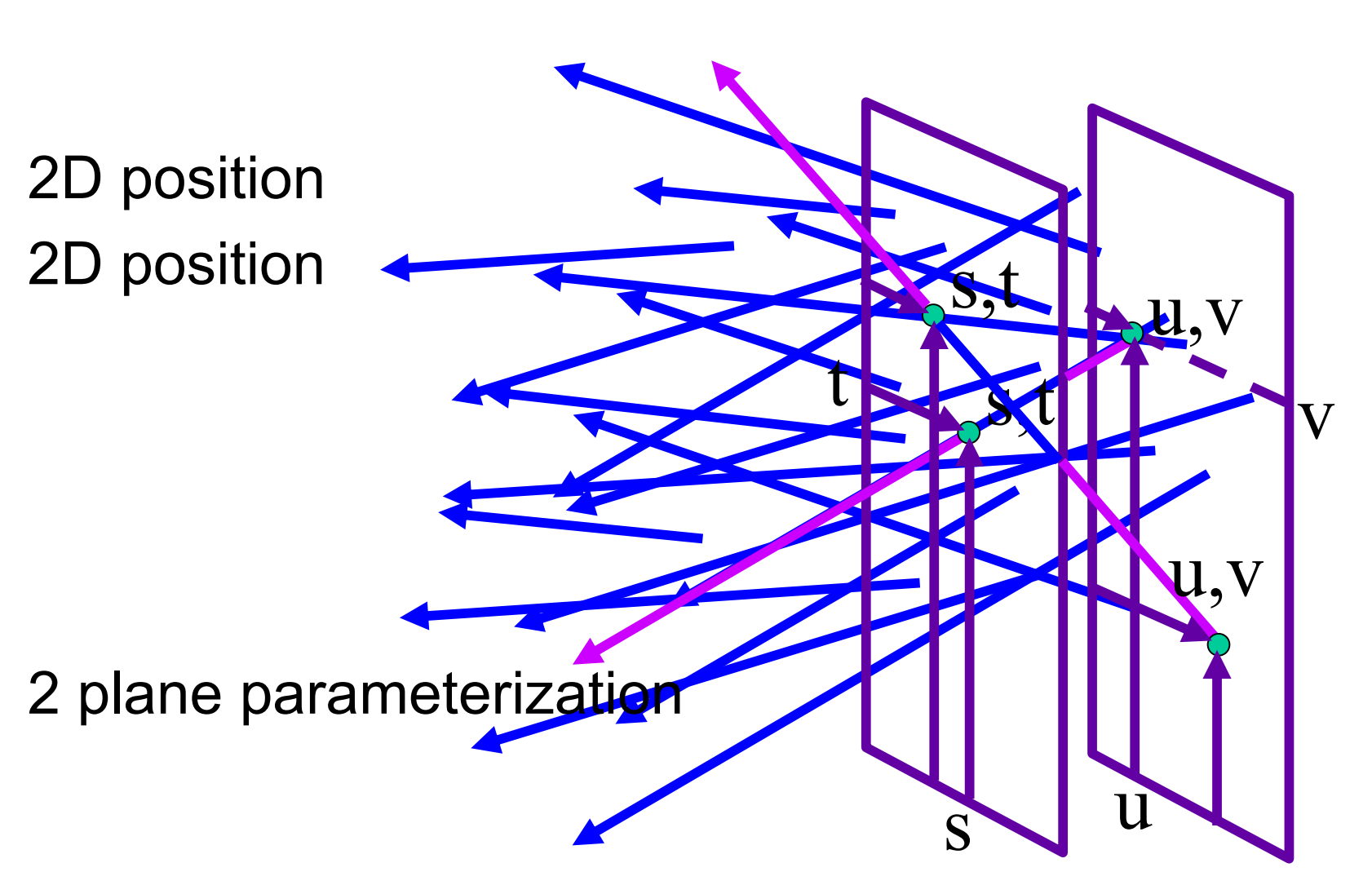

Parameterization

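2D position, 2D direction: a point on a surface plus a direction $(\theta, \phi)$

2-plane parameterization, 2D position + 2D position: a ray is identified by where it crosses two parallel planes, $(u, v)$ on the first and $(s, t)$ on the second, giving $L(u, v, s, t)$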

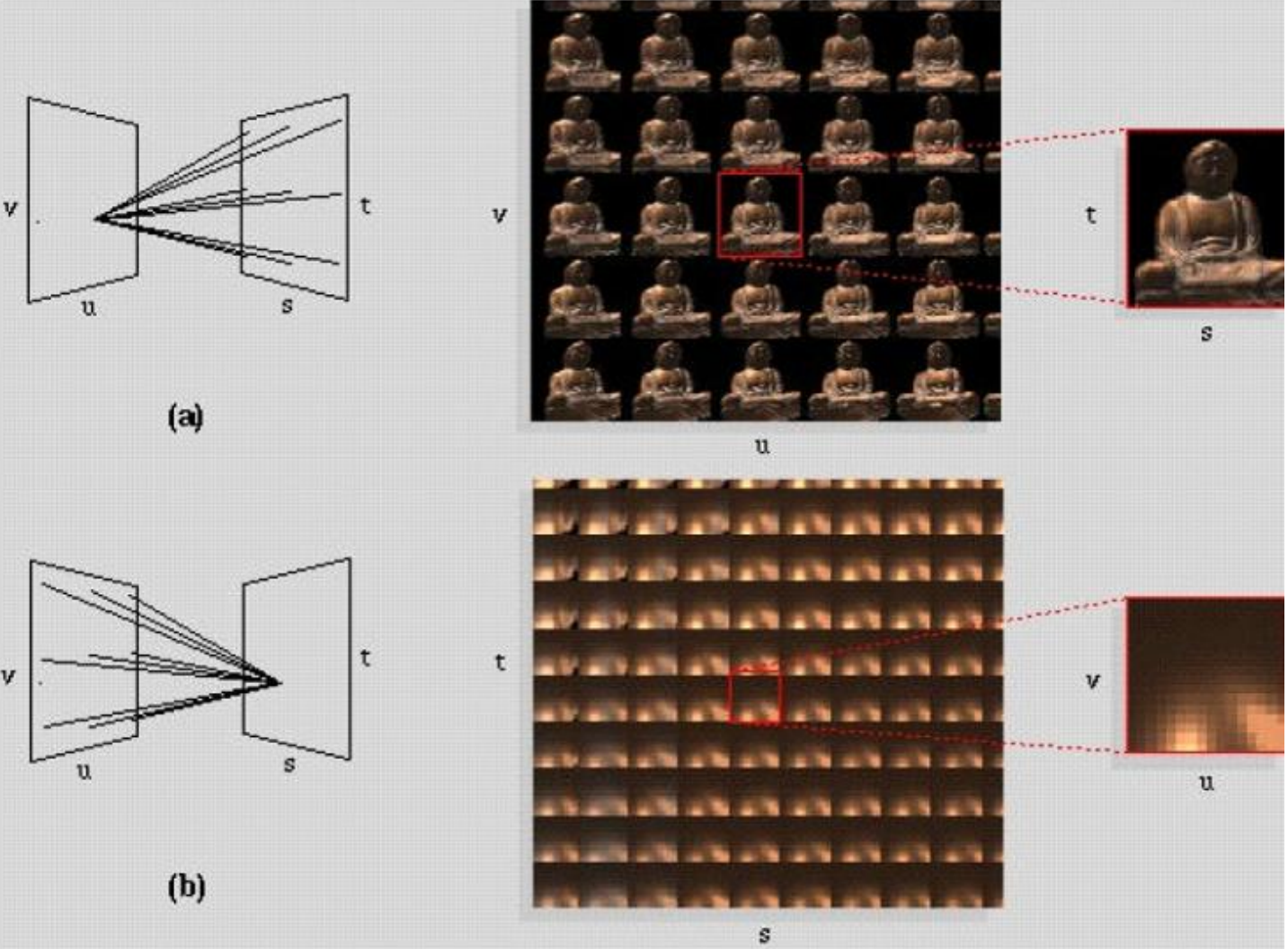
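Converting between a ray and its two-plane coordinates takes only two plane intersections. This sketch assumes the planes are at $z = 0$ ($uv$) and $z = 1$ ($st$). That placement is an arbitrary choice, and the function names are hypothetical. View synthesis then amounts to computing $(u, v, s, t)$ for each ray of the new camera and looking up $L(u, v, s, t)$.

```python
def ray_to_uvst(origin, direction, z_uv=0.0, z_st=1.0):
    """Intersect a ray with the planes z = z_uv and z = z_st."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("a ray parallel to the planes has no two-plane coordinates")
    t_uv = (z_uv - oz) / dz
    t_st = (z_st - oz) / dz
    return (ox + t_uv * dx, oy + t_uv * dy, ox + t_st * dx, oy + t_st * dy)

def uvst_to_ray(u, v, s, t, z_uv=0.0, z_st=1.0):
    """Inverse: the ray through (u, v, z_uv) towards (s, t, z_st)."""
    return (u, v, z_uv), (s - u, t - v, z_st - z_uv)

coords = ray_to_uvst((0.3, -0.2, -2.0), (0.1, 0.05, 1.0))
print(coords, ray_to_uvst(*uvst_to_ray(*coords)))
```

Any point along the same ray gives the same four numbers, which is exactly why four coordinates suffice in free space.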

Recording the Lumigraph

Assume we are viewing from left of the

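Fix a point on the $uv$ plane and take all rays from it to the $st$ plane: this gives a complete image of the scene, as seen by a pinhole camera at that point.

Fix a point on the $st$ plane and take all rays to it from the $uv$ plane: this gives the radiance arriving at that point from all directions.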

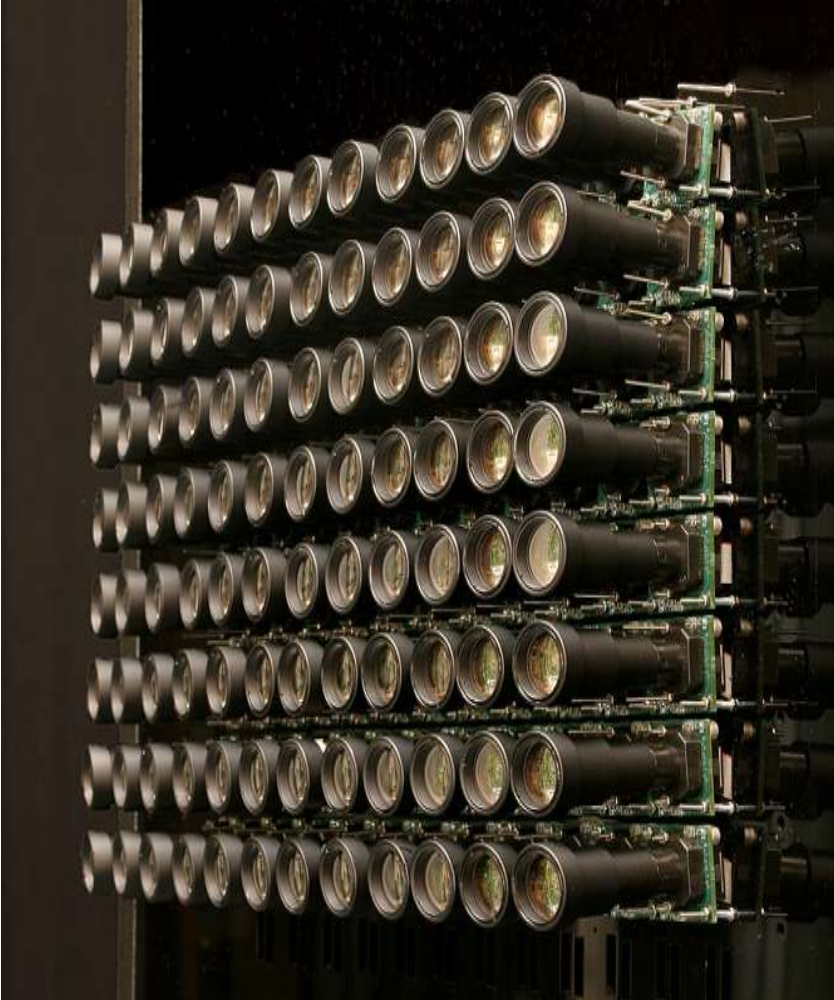
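Stored as a 4D array `L[u][v][s][t]`, the two "fix" operations are just 2D slices. The array below is a hypothetical toy whose entries encode their own indices, so the slicing is easy to verify. The function names are illustrative.

```python
# Toy light field L[u][v][s][t]; each entry encodes its indices as u*1000 + v*100 + s*10 + t.
U, V, S, T = 3, 3, 4, 5
L = [[[[u * 1000 + v * 100 + s * 10 + t for t in range(T)] for s in range(S)]
      for v in range(V)] for u in range(U)]

def image_from_viewpoint(L, u, v):
    """Fix (u, v), vary (s, t): the image seen from one point on the uv plane."""
    return L[u][v]

def directions_at_point(L, s, t):
    """Fix (s, t), vary (u, v): radiance reaching one st point from every uv point."""
    return [[L[u][v][s][t] for v in range(len(L[0]))] for u in range(len(L))]

print(image_from_viewpoint(L, 1, 2)[0])
print(directions_at_point(L, 2, 3))
```

A camera array fills in `L` one $(u, v)$ slice at a time, with one camera per viewpoint.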

Camera array

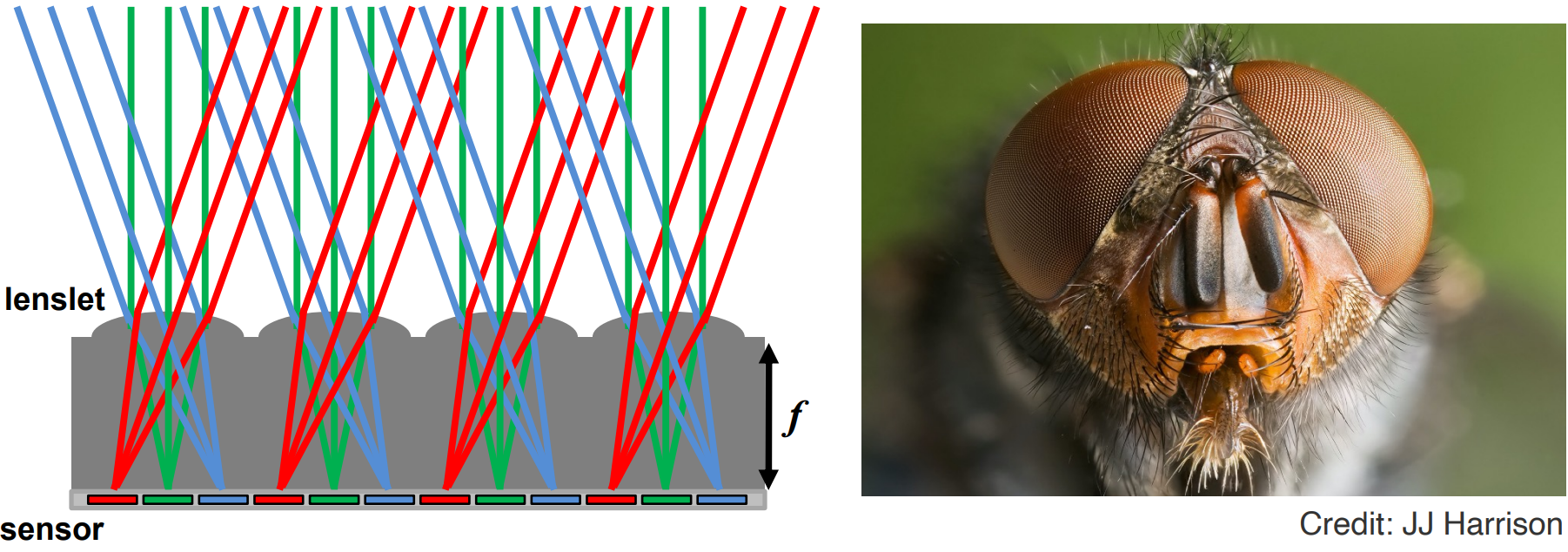

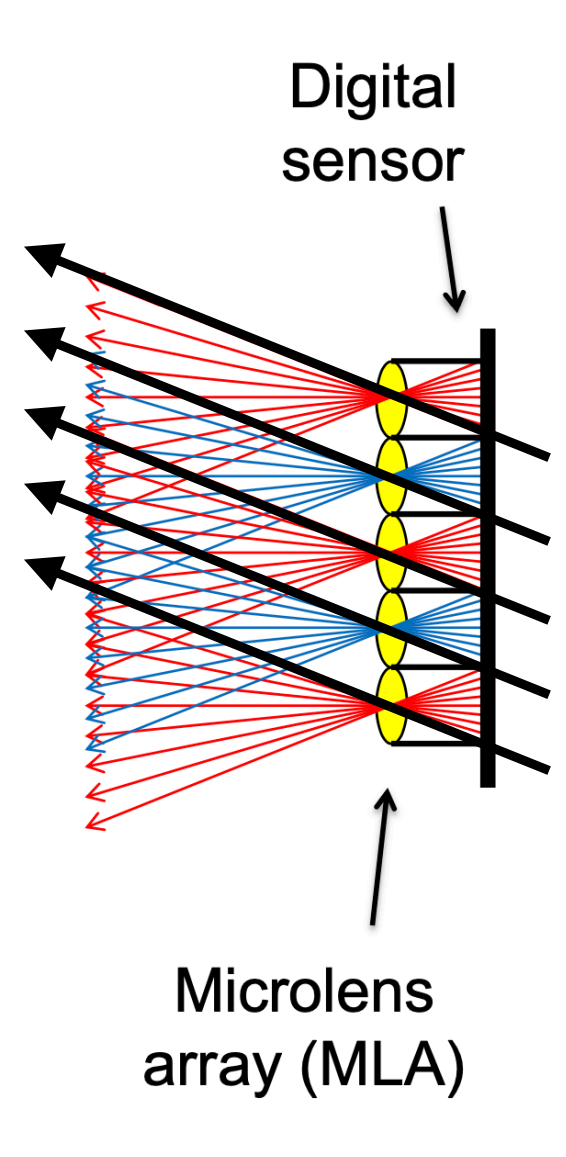

Integral Imaging

A fly's compound eye effectively records a lumigraph (radiance) of the scene.

Spatially-multiplexed light field capture using lenslets.

Imposes a fixed trade-off between spatial and angular resolution
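The trade-off is plain arithmetic: each lenslet covers a block of sensor pixels. The number of lenslets sets the spatial resolution, and the pixels per block set the number of directions. The 4000 x 3000 sensor and the 10 x 10 blocks are hypothetical, and square, aligned blocks are assumed.

```python
def lenslet_resolution(sensor_w: int, sensor_h: int, pixels_per_lenslet: int):
    """Return ((spatial width, spatial height), directions per spatial sample)."""
    k = pixels_per_lenslet
    return (sensor_w // k, sensor_h // k), k * k

(spatial_w, spatial_h), directions = lenslet_resolution(4000, 3000, 10)
print(spatial_w, spatial_h, directions)
```

A 12-megapixel sensor yields only a 400 x 300 image with 100 directions per pixel. Spatial samples times directions can never exceed the sensor's pixel count.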

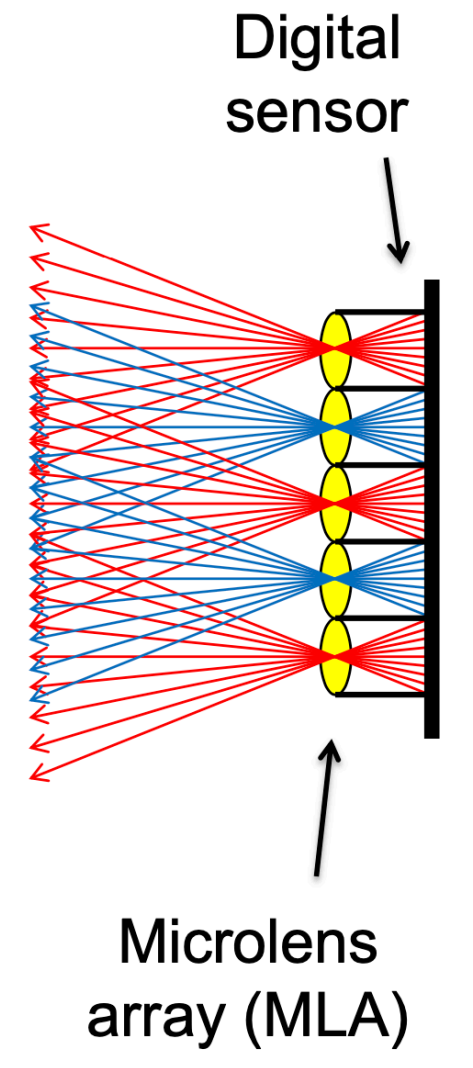

Light Field Camera

Prof. Ren Ng founded Lytro, a company that made light field cameras.

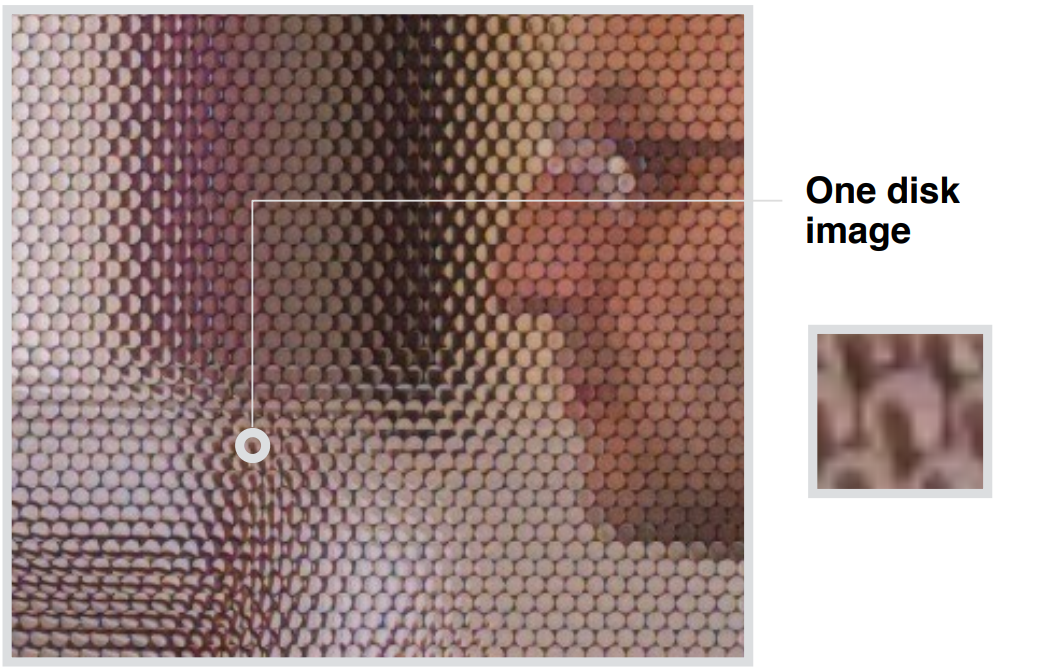

Computational Refocusing: virtually changing the focal length, aperture size, etc. after the photo has been taken

Picture taken by a light field camera

Each pixel (irradiance) is now stored as a block of pixels (radiance, i.e. the light arriving from different directions)

If each disk of "recorded radiance" is averaged into a single pixel, the resulting picture is the same as what a normal camera would have taken.
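Averaging each disk is straightforward to sketch. The code assumes, hypothetically, that each lenslet covers an aligned U x V pixel block of the raw image (real cameras need calibration for lenslet position and rotation). It reorganizes the raw image as `L[u][v][s][t]`, where $(s, t)$ indexes the lenslet and $(u, v)$ the pixel, i.e. direction, inside it. Averaging over $(u, v)$ then gives the conventional photo.

```python
def raw_to_light_field(raw, U, V):
    """Split a raw lenslet image into L[u][v][s][t] (aligned U x V blocks assumed)."""
    S, T = len(raw) // U, len(raw[0]) // V
    return [[[[raw[s * U + u][t * V + v] for t in range(T)] for s in range(S)]
             for v in range(V)] for u in range(U)]

def conventional_photo(L):
    """Average over all directions (u, v): what an ordinary camera would record."""
    U, V = len(L), len(L[0])
    S, T = len(L[0][0]), len(L[0][0][0])
    return [[sum(L[u][v][s][t] for u in range(U) for v in range(V)) / (U * V)
             for t in range(T)] for s in range(S)]

raw = [[r * 10 + c for c in range(6)] for r in range(4)]   # toy 4 x 6 sensor, 2 x 2 disks
print(conventional_photo(raw_to_light_field(raw, 2, 2)))
```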

Getting a Photo from the Light Field Camera

Virtually moving the camera: always choose the pixel at the same fixed position within each disk

Computational/Digital Refocusing: virtually change the focus, and pick the rays that converge on the new plane of focus accordingly
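Both operations can be sketched on a light field `L[u][v][s][t]`, where $(s, t)$ indexes the lenslet and $(u, v)$ the pixel within each disk. Picking one $(u, v)$ gives a sub-aperture view. Refocusing here uses the simple shift-and-add scheme: shift each view in proportion to its offset from the aperture centre, then average. This is one standard technique, not necessarily the pipeline of any particular camera. Integer shifts with edge clamping keep the sketch short, and the sign convention for `shift` is arbitrary.

```python
def sub_aperture_image(L, u, v):
    """Same pixel position (u, v) in every disk: the view through one part of the aperture."""
    return [row[:] for row in L[u][v]]

def refocus(L, shift):
    """Shift-and-add refocusing; shift = 0 reproduces the ordinary (averaged) photo."""
    U, V = len(L), len(L[0])
    S, T = len(L[0][0]), len(L[0][0][0])
    cu, cv = (U - 1) / 2, (V - 1) / 2
    out = [[0.0] * T for _ in range(S)]
    for u in range(U):
        for v in range(V):
            du, dv = round(shift * (u - cu)), round(shift * (v - cv))
            for s in range(S):
                for t in range(T):
                    ss = min(max(s + du, 0), S - 1)   # clamp at the image border
                    tt = min(max(t + dv, 0), T - 1)
                    out[s][t] += L[u][v][ss][tt] / (U * V)
    return out

# Toy scene: a point that appears at a different place in each 3 x 3 sub-aperture view.
U = V = 3
S = T = 5
L = [[[[1.0 if (s, t) == (1 + u, 1 + v) else 0.0 for t in range(T)] for s in range(S)]
      for v in range(V)] for u in range(U)]
print(refocus(L, 0)[2][2], refocus(L, 1)[2][2])
```

With `shift = 0` the point is smeared over nine positions. With `shift = 1` all nine views line up again and the point comes back into focus.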

Why can it do all this?

The light field records every sampled ray (both position and direction), so each of these images can be computed from it after capture

Pros & Cons

Insufficient spatial resolution: the same sensor (film) is shared between spatial and directional information

High cost